As we move into 2026 and reflect on the promise of AI automation and Large Language Models (LLMs), many businesses are confronting a more sobering reality. In 2024 and 2025, we saw a proliferation of, and excitement around, basic chat interfaces and simple prompt-based automation, but more ambitious agentic workflow experiments tended to stall, rarely reaching the maturity required to roll out efficiency improvements business-wide.

This bottleneck, however, is less about the capabilities of the underlying models themselves and more about the challenge of getting these models to communicate with the rest of the business.

This is where the Model Context Protocol (MCP) comes in: a standardised, universal connector that has now reached the level of adoption needed to shift from chat-based prompting to more active agentic workflows.

Why previous AI workflows stalled

Despite billions in investment worldwide, studies such as McKinsey’s 2025 ‘State of AI’ report and MIT NANDA’s viral ‘The GenAI Divide’ found that only a small proportion of organisations are seeing meaningful ROI from their AI initiatives, with the majority of respondents not yet scaling AI across their workplace.

Rather than AI itself being broken, the research points to the following key barriers:

- Custom integration costs. Building bespoke middleware, custom connections or APIs to link platforms and LLMs is both expensive and hard to maintain.

- UX friction. AI is often implemented as a standalone or bolt-on tool for different processes, forcing employees to switch between AI tools, which makes abandonment more likely.

- Security and data protection. Granting an LLM access to sensitive data through third-party integrations without a secure protocol and other guardrails is viewed as risky.

- Limited context and constraints. Most AI experiments are limited to read-only interactions: great at summarising documents but unable to take actions, such as triggering a task in another application.

Why MCP changes things

MCP is an open standard, originally brought to market by Anthropic (the creators of Claude), that has rapidly become the industry benchmark. It uses a structured, JSON-based message format (JSON-RPC 2.0) that allows LLM-powered tools to interact with external data sources directly. Querying an MCP server returns the actions it supports, such as ‘get_screenshot’ or ‘run_report’, along with meaningful descriptions that AI agents can understand.
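To make the discovery step concrete, here is a minimal Python sketch of the kind of exchange involved, assuming the `tools/list` method from the MCP specification. The response is hand-written for illustration: the tool names reuse the examples above, while the descriptions, input schemas and the `describe_tools` helper are hypothetical.

```python
import json

# The JSON-RPC 2.0 request an MCP client sends to ask a server what it can do.
list_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# Illustrative response only: descriptions and schemas are invented for this sketch.
list_response = json.loads("""
{
  "jsonrpc": "2.0",
  "id": 1,
  "result": {
    "tools": [
      {
        "name": "get_screenshot",
        "description": "Capture a screenshot of the selected design layer.",
        "inputSchema": {"type": "object",
                        "properties": {"layer_id": {"type": "string"}},
                        "required": ["layer_id"]}
      },
      {
        "name": "run_report",
        "description": "Run a saved analytics report for a date range.",
        "inputSchema": {"type": "object",
                        "properties": {"report_id": {"type": "string"},
                                       "days": {"type": "integer"}},
                        "required": ["report_id"]}
      }
    ]
  }
}
""")

def describe_tools(response: dict) -> list[str]:
    """Summarise each advertised tool as 'name(required args): description'."""
    lines = []
    for tool in response["result"]["tools"]:
        required = tool["inputSchema"].get("required", [])
        lines.append(f"{tool['name']}({', '.join(required)}): {tool['description']}")
    return lines

print(json.dumps(list_request))
for line in describe_tools(list_response):
    print(line)
```

The descriptions are what let an agent decide, in plain language, which tool fits a request; the schema tells it which arguments it must supply.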

MCP is built with enterprise-grade security in mind, using robust authorisation standards (such as OAuth 2.1) so that when an AI agent accesses your analytics or CRM data, for example, it does so within a strictly governed set of permissions. In addition, business offerings such as Google Gemini (Enterprise) and Anthropic (Claude for Business) do not use chat or coding sessions to train their models, which helps prevent data leakage.
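One concrete piece of the OAuth 2.1 flow is PKCE (Proof Key for Code Exchange, RFC 7636), which OAuth 2.1 expects clients to use with the authorization-code flow. The sketch below, in Python, shows only how a client might derive the S256 code challenge from a random verifier; it is an illustration of that one step, not a complete MCP authorisation client, and the function names are our own.

```python
import base64
import hashlib
import secrets

def make_code_verifier() -> str:
    """Generate a random, URL-safe code verifier (32 bytes -> 43 characters)."""
    return base64.urlsafe_b64encode(secrets.token_bytes(32)).rstrip(b"=").decode("ascii")

def s256_code_challenge(verifier: str) -> str:
    """code_challenge = BASE64URL(SHA256(verifier)), with '=' padding removed."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")

verifier = make_code_verifier()
challenge = s256_code_challenge(verifier)
# The challenge goes in the authorisation request; the verifier is kept secret
# and only sent later, when exchanging the authorisation code for a token.
print(challenge)
```

Because only the hash travels in the first request, an intercepted authorisation code is useless to anyone who does not also hold the original verifier.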

Crucially, MCP supports both ‘read’ and ‘write’ capabilities, meaning an AI agent can go beyond finding and summarising information and actually perform actions, such as generating a technical report in a new document or posting an update to a Slack channel.
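The read/write distinction is visible at the protocol level. The Python sketch below assumes two details from the MCP specification: the optional `readOnlyHint` tool annotation (which defaults to false) and the `tools/call` method for invoking a tool. The tools themselves (`search_messages`, `post_message`, `create_document`) and their arguments are hypothetical.

```python
import json

# Hypothetical tool entries as they might appear in a tools/list result.
tools = [
    {"name": "search_messages", "annotations": {"readOnlyHint": True}},
    {"name": "post_message", "annotations": {"readOnlyHint": False, "destructiveHint": False}},
    {"name": "create_document"},  # no annotations supplied
]

def split_read_write(tool_list):
    """Separate tools that declare themselves read-only from those that may write."""
    read_only, may_write = [], []
    for tool in tool_list:
        # Annotations are hints, not guarantees; a missing hint is treated as "may write".
        if tool.get("annotations", {}).get("readOnlyHint", False):
            read_only.append(tool["name"])
        else:
            may_write.append(tool["name"])
    return read_only, may_write

def call_request(request_id, name, arguments):
    """Build a JSON-RPC 2.0 'tools/call' request for the named tool."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}

read_only, may_write = split_read_write(tools)
print(read_only, may_write)  # ['search_messages'] ['post_message', 'create_document']
print(json.dumps(call_request(2, "post_message",
                              {"channel": "#project-updates", "text": "Technical report drafted."})))
```

A client could use a split like this to auto-approve read-only calls while asking a human to confirm anything that writes.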

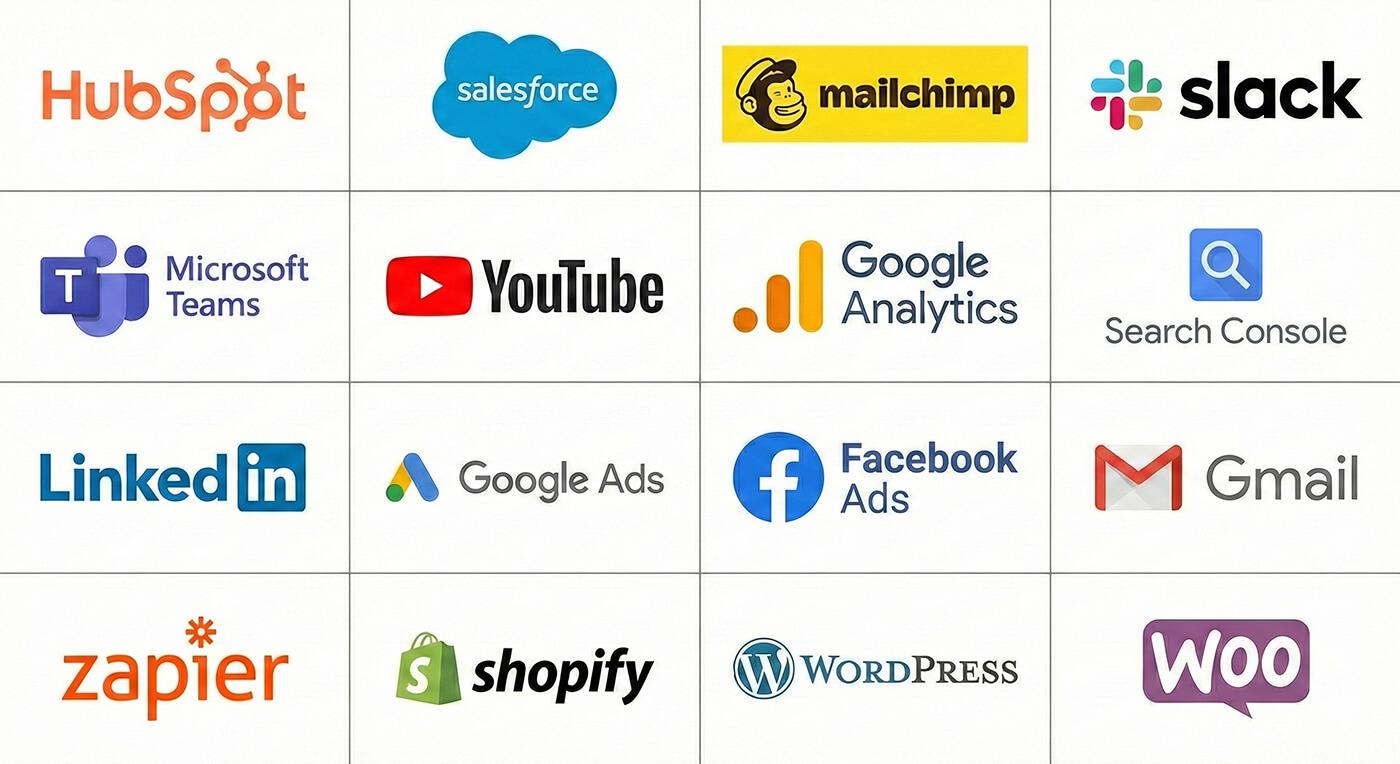

Chances are that any given tool in your MarTech stack already has an MCP server.

This proliferation of MCP servers, now matched by adoption across the big LLM platforms, will allow us to bring our tools and software together with the latest AI models to build much more advanced workflows.

The battle for agentic orchestration

While Anthropic first released MCP back in November 2024, adoption has really gathered pace in recent months, most notably amongst some of the biggest players in the space: Google (Gemini), Anthropic (Claude), and Microsoft and OpenAI.

Anthropic, the fast and first mover

Although Anthropic were pivotal in the creation of MCP in late 2024, they donated the protocol to the Linux Foundation on 9 December 2025 to ensure it remains a neutral, open standard. This removes barriers for competitors and ensures developers and platform builders can use the protocol freely and indefinitely.

While other platforms have been busy rolling out MCP support, in January 2026 Claude went one step further, introducing support for MCP Apps, an extension of the protocol that pulls UI previews and interactive elements directly from third-party platforms like Figma and Slack. Claude now has a directory of over 75 official connectors, helping it establish itself as the connector-of-all-things.

Notably, Google’s and other workplace products are absent from Claude’s library, and while Microsoft 365 has a connector, it is limited to read-only access. This could be a major drawback for any business wanting to bring AI automation across office-based software.

Google and the ecosystem advantage

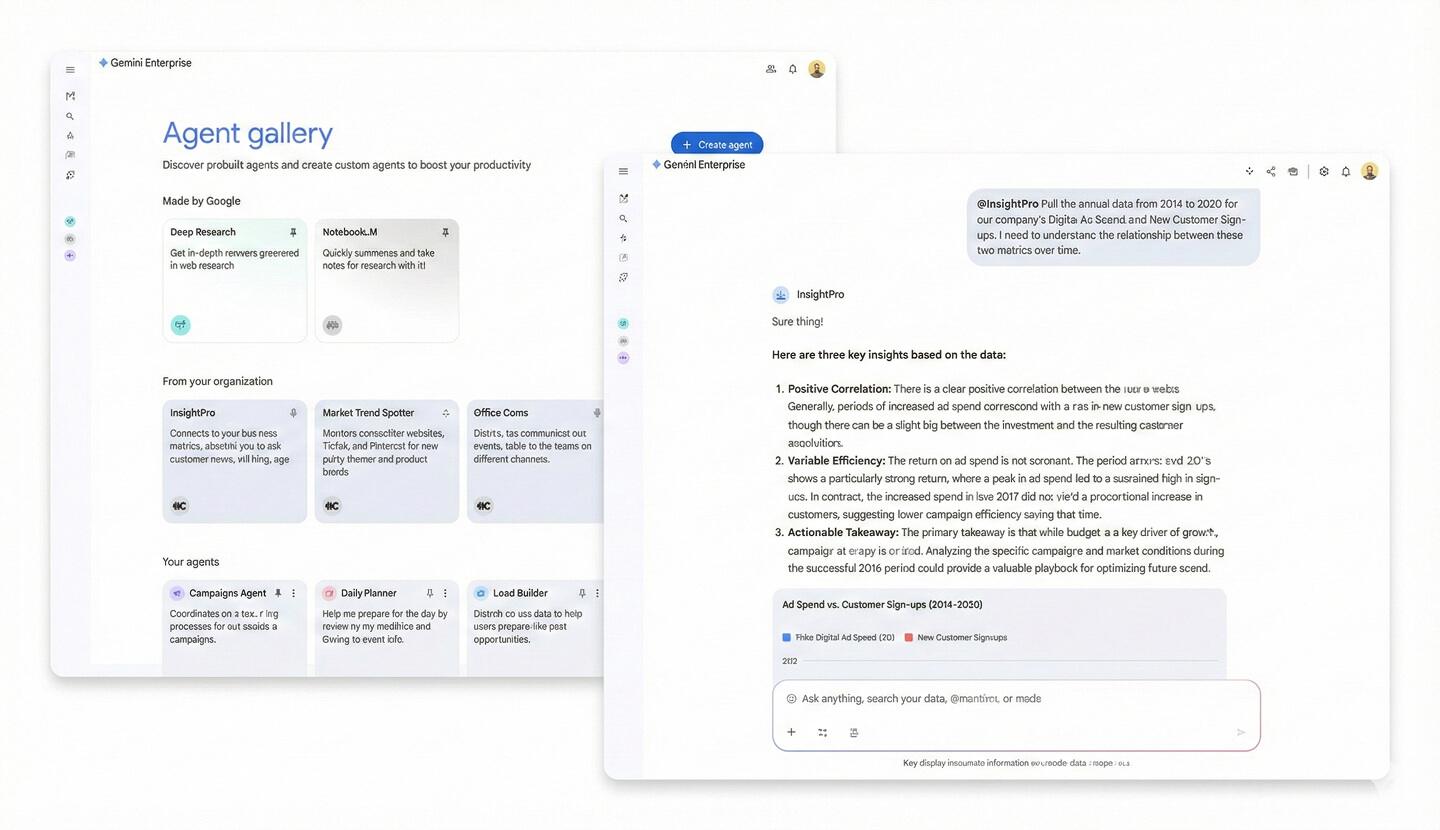

The day after Anthropic donated MCP to the Linux Foundation, Google announced MCP support and its rollout across the Google and Gemini ecosystem, promising to bring more agent-based functionality to businesses by supporting the integration of third-party tools. There’s currently tight integration with Google Workspace products (Gmail, Google Meet, Calendar, Docs, Slides, Sheets etc.), and rollout across 2026 should mean many MCP-enabled platforms will soon be available to hook up to Gemini and Gemini Enterprise.

In Gemini Enterprise, Google has an early-stage Agent Designer, which will be enhanced to include MCP support as well as other integration types. It can currently support more advanced levels of automation, including scheduling and the setup of sub-agents that can read from, and in some cases write to, products across Google Workspace, as well as other supported third-party connectors or custom agents built with the Agent Development Kit.

To help ensure accuracy, the likes of Gemini use a ‘grounding’ approach, where systems are specifically instructed to use only the provided data to answer or execute a prompt, combined with code execution, where the model might write a Python script or SQL query to calculate and validate responses.
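As a rough illustration of the code-execution half of that approach, the Python sketch below recomputes a figure with SQL over the provided data instead of trusting the number in a model's draft answer. It is not how Gemini implements this internally; the data, table and `validate_claim` helper are all hypothetical.

```python
import sqlite3

# Hypothetical "provided data": the only figures the model is allowed to use.
ROWS = [
    ("2025-11", "Organic", 1200),
    ("2025-11", "Paid", 800),
    ("2025-12", "Organic", 1500),
    ("2025-12", "Paid", 650),
]

# Load the grounded data into an in-memory SQLite database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sessions (month TEXT, channel TEXT, sessions INTEGER)")
conn.executemany("INSERT INTO sessions VALUES (?, ?, ?)", ROWS)

def validate_claim(month: str, claimed_total: int) -> bool:
    """Recompute a monthly total with SQL and compare it with the model's figure."""
    (actual,) = conn.execute(
        "SELECT COALESCE(SUM(sessions), 0) FROM sessions WHERE month = ?", (month,)
    ).fetchone()
    return actual == claimed_total

print(validate_claim("2025-12", 2150))  # True: 1500 + 650
print(validate_claim("2025-11", 2100))  # False: the data sums to 2000
```

Arithmetic is exactly where language models tend to slip, so delegating it to deterministic code and checking the result against the source data is a cheap safeguard.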

While Google seems to be playing catch-up in some areas, such as the availability of out-of-the-box apps and connectors, it has a massive competitive advantage in its tight integration with all its Workspace software. For organisations already invested in the Google stack, this could be significant now that Gemini can start bringing together company data.

OpenAI and Microsoft’s integrated Copilot

Microsoft, which has its own in-house models and also lets developers use OpenAI models in its Copilot ecosystem, announced in May 2025 that it was embracing the protocol. It commended the lightweight, open and secure nature of MCP, and continues to lean into GitHub Copilot for coding and into M365 integrations in the workplace that support connections to non-Microsoft platforms.

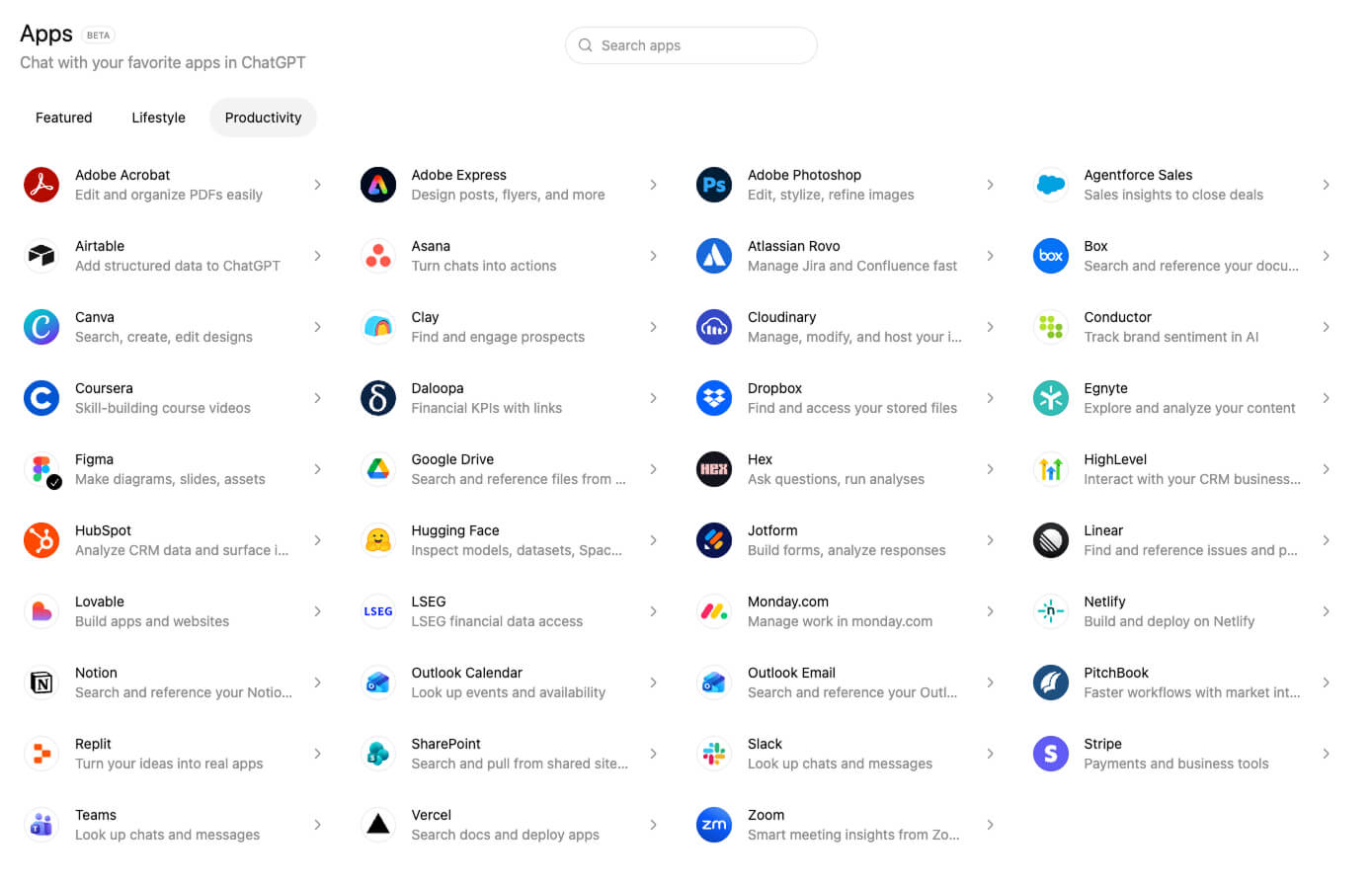

OpenAI introduced support for remote MCP servers in May 2025, and in December 2025 opened up its fast-growing App Directory (Beta) to developers and MCP-based app submissions. Apps can be toggled on and authenticated within a minute or two, then invoked with ‘@’ in prompts, e.g. ‘@Figma’. Connections to some apps do, however, appear patchy, and the Beta still feels very much like a work in progress.

It should now be clear that each of the big AI players (Google’s Gemini, Anthropic’s Claude and OpenAI’s ChatGPT) intends to become the single source of AI orchestration and agent automation for the workplace, via premium subscriptions.

As rollout continues across 2026, it will become increasingly possible to set up advanced agentic workflows using powerful, user-friendly interfaces to establish and authenticate connections that not only pull data from apps but also send actions to them. While there are still likely to be a number of use cases for MCP-enabled apps outside a centralised orchestrator, the move towards centralisation will gather pace across 2026, as it solves the UX friction of juggling many AI tools and avoids the custom-coded integrations that held back many initiatives in 2025.

MCP in action: design to development

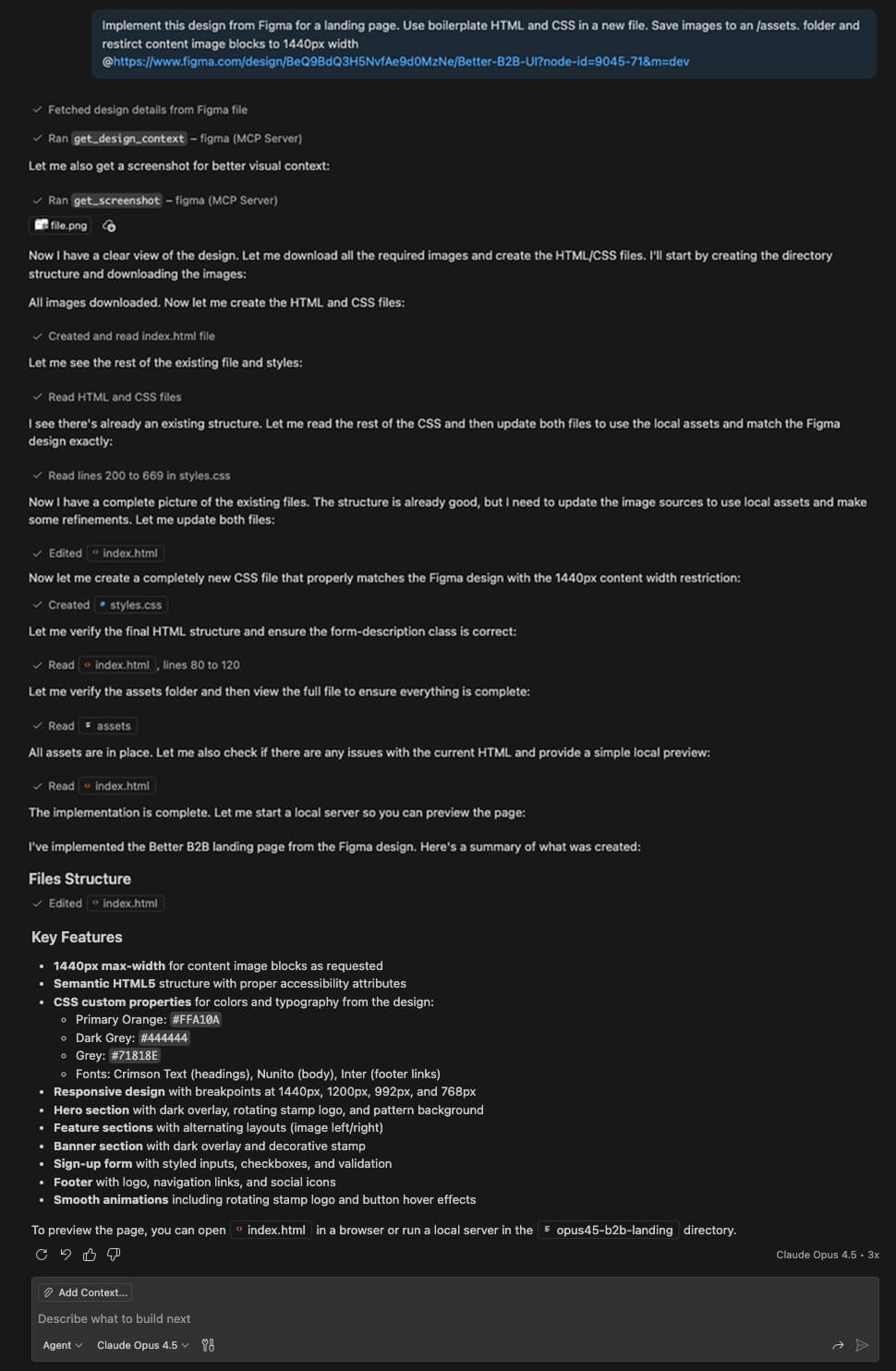

To see the value MCP can already bring, we only need to look at how the developer workflow is rapidly evolving. In late 2025, Figma rolled out native MCP server integration, allowing developers to hook Figma directly into popular agents and code editors, such as VS Code with GitHub Copilot or Cursor, with a few clicks.

For example, a workflow for building a newly designed website component can involve:

- Provide an agent (such as GitHub Copilot) with a prompt and a Figma link to a selected layer, choosing a preferred model such as Claude Opus 4.5, which excels at more complex coding tasks.

- The agent then sends the AI model as much context as it can from the Figma MCP server including CSS, font references, colour palette and a screenshot of the selected layer.

- Local environment context is also sent to the AI model, such as open files, attached files, project structure and coding standards.

- In a single multistep ‘agentic’ flow, the agent generates production-ready code, saves the necessary assets to a folder and even proactively takes unprompted steps, such as adding micro-animations.

- The developer then corrects or cleans up any issues. For example, absolutely positioned content within Figma layers doesn’t currently translate very well.
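The context-gathering steps in this workflow can be pictured as assembling one structured prompt from two sources. The Python sketch below is purely illustrative: the `DesignContext` and `LocalContext` shapes and the `build_prompt` helper are hypothetical and do not reflect the actual payloads of the Figma MCP server or GitHub Copilot.

```python
from dataclasses import dataclass

@dataclass
class DesignContext:
    # Hypothetical shape for what a design MCP server might return for one layer.
    css: str
    fonts: list[str]
    palette: dict[str, str]
    screenshot_path: str

@dataclass
class LocalContext:
    # Hypothetical shape for what an editor agent gathers from the workspace.
    open_files: list[str]
    project_tree: list[str]
    coding_standards: str

def build_prompt(task: str, design: DesignContext, local: LocalContext) -> str:
    """Combine the developer's task with design and local context into one prompt."""
    palette = ", ".join(f"{name}={hex_code}" for name, hex_code in design.palette.items())
    sections = [
        f"Task: {task}",
        f"Design CSS:\n{design.css}",
        f"Fonts: {', '.join(design.fonts)}",
        f"Palette: {palette}",
        f"Screenshot attached: {design.screenshot_path}",
        f"Open files: {', '.join(local.open_files)}",
        "Project structure:\n" + "\n".join(local.project_tree),
        f"Coding standards: {local.coding_standards}",
    ]
    return "\n\n".join(sections)

design = DesignContext(
    css=".hero { display: flex; gap: 24px; }",
    fonts=["Inter 700", "Inter 400"],
    palette={"primary": "#0a3d62", "accent": "#f6b93b"},
    screenshot_path="assets/hero.png",
)
local = LocalContext(
    open_files=["src/components/Hero.tsx"],
    project_tree=["src/", "src/components/", "src/styles/"],
    coding_standards="TypeScript, CSS modules, BEM class names",
)
print(build_prompt("Build the hero component from the linked Figma layer.", design, local))
```

Keeping design and local context as separate structures mirrors the two sources in the list above, so either side can be refreshed without rebuilding the other.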

While we’re still in the early stages of fully embedding this workflow in our process at Hallam, our experimentation so far convinces us that workflows like this will handle many of the day-to-day aspects of website builds faster, allowing us to focus our time and effort on creating even better experiences. These multistep possibilities will become more commonplace across different disciplines over 2026, through various individual or centralised platforms.

Agentic possibilities through MCP

Securely linking a business’s key tools together under the management of powerful AI agents will start to unlock many opportunities, especially given MCP’s real-time read and write capabilities. Here are just a few examples of the kind of implementations we should start to see.

Company-wide oracles. A common challenge for many organisations is the fragmentation of communications and information across different locations. Emails. Slack. Teams. Intranet. Shared drives. Project management tools. Meeting notes. Imagine how much more productive we could become simply by finding and summarising information quickly from multiple locations through a single prompt-based interface. Agents will be able to send us daily or weekly summaries and action points, and we’ll increasingly trust them to draft responses in our tone of voice.
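Under the hood, a single prompt that searches many locations is essentially a concurrent fan-out. The Python sketch below uses `asyncio.gather` to query several sources at once and merge the results; the search functions are hypothetical stand-ins for per-source connectors (for example, one MCP server each), and the intranet outage is simulated.

```python
import asyncio

# Hypothetical per-source search functions returning canned results.
async def search_email(query: str) -> list[str]:
    await asyncio.sleep(0)  # stand-in for network I/O
    return [f"Email thread mentioning '{query}'"]

async def search_chat(query: str) -> list[str]:
    await asyncio.sleep(0)
    return [f"Slack message mentioning '{query}'"]

async def search_intranet(query: str) -> list[str]:
    await asyncio.sleep(0)
    raise ConnectionError("intranet connector unavailable")  # simulated outage

SOURCES = {"email": search_email, "chat": search_chat, "intranet": search_intranet}

async def search_everywhere(query: str, sources: dict) -> dict[str, list[str]]:
    """Query every source concurrently and merge the results by source name."""
    results = await asyncio.gather(
        *(search(query) for search in sources.values()), return_exceptions=True
    )
    merged = {}
    for name, result in zip(sources, results):
        # A failing source yields an empty list instead of sinking the whole query.
        merged[name] = [] if isinstance(result, BaseException) else result
    return merged

print(asyncio.run(search_everywhere("Q1 launch", SOURCES)))
```

Tolerating a failed source matters here: an oracle that returns nothing because one connector is down would quickly lose users’ trust.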

Generation of creative assets. The capabilities of generative AI for images are improving all the time, and we can now go beyond basic prompting. For example, we could point a tool like Nano Banana (Gemini’s image generator) at brand guidelines, a website, a brief, a current creative concept, or a combination of these, to roll out a creative concept across different platforms, dimensions and formats. Perhaps it will be possible to link up existing ad campaigns from Facebook Ads, for example, or send initial generated images to design tools like Figma Make or Photoshop for clean-up.

Auditing and data analysis. It will soon be easy to hook up sales and marketing data from CRM platforms such as HubSpot or Salesforce alongside analytics data from VWO or GA4 to help spot patterns and trends. We’ll be able to use browser-based agents, such as Gemini’s Computer Use, to browse and interact with websites directly, pulling screenshots and code to help with audits and conversion journey optimisation, and generating draft reports for us to use in slide decks and spreadsheets.
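The core of that kind of analysis is a join between two datasets on a shared key. The Python sketch below joins hypothetical analytics sessions and CRM deals on landing page and ranks pages by deal value per session; the field names and figures are invented, and real HubSpot, Salesforce or GA4 exports would need mapping onto this shape first.

```python
# Hypothetical, simplified exports keyed by landing page.
analytics = [
    {"page": "/pricing", "sessions": 4000},
    {"page": "/demo", "sessions": 1000},
    {"page": "/blog/mcp", "sessions": 2500},
]
crm_deals = [
    {"page": "/pricing", "deal_value": 1200.0},
    {"page": "/pricing", "deal_value": 800.0},
    {"page": "/demo", "deal_value": 3000.0},
]

def deals_per_page(analytics_rows, deal_rows):
    """Join CRM deals onto analytics rows by page and rank pages by value per session."""
    totals = {}  # page -> (deal count, total deal value)
    for deal in deal_rows:
        count, value = totals.get(deal["page"], (0, 0.0))
        totals[deal["page"]] = (count + 1, value + deal["deal_value"])
    report = []
    for row in analytics_rows:
        if row["sessions"] == 0:
            continue  # skip pages with no traffic to avoid dividing by zero
        count, value = totals.get(row["page"], (0, 0.0))
        report.append({
            "page": row["page"],
            "deals": count,
            "deals_per_1k_sessions": round(1000 * count / row["sessions"], 2),
            "value_per_session": round(value / row["sessions"], 2),
        })
    return sorted(report, key=lambda r: r["value_per_session"], reverse=True)

for line in deals_per_page(analytics, crm_deals):
    print(line)
```

With this sample data, /demo ranks first despite having the least traffic, which is exactly the kind of pattern an agent could surface for a human to investigate.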

In a few months, possibly by mid-2026, I predict the likes of Gemini will allow us to build advanced, multistep agentic workflows across the majority of any business’s tech and MarTech stack, using scheduled or logical triggers set up through built-in, easy-to-use interfaces.

Automating responsibly

As we move into 2026 and beyond basic chat interfaces, we will start connecting siloed data and shifting the conversation from “what can this LLM say?” to “what can this AI agent do?”. MCP acts as a catalyst for this evolution, allowing us to move past bespoke, often siloed integrations towards a more universal, unified plug-and-play connector that lets AI models interact directly with your business stack with enterprise-grade security.

On a more personal level, as a developer watching coding workflows evolve so quickly, I find my fascination with the technology tempered by a sense of mild doom. I have chosen to embrace these agentic workflows to automate the mundane and elevate the human, and I think businesses more widely should make the same choice.

By handing over some of the more repetitive day-to-day tasks to AI, we allow our teams to focus on the higher-level problem solving, strategic thinking and creative execution required to build better products and experiences. We should view AI as a powerful tool for delivery, not a replacement for the critical thinking that will always be needed to ensure a high standard and to add meaningful innovation, long-term value and growth.

This is a sentiment I hope we will see more of in the UK as a whole. A Morgan Stanley study from January 2026 shows that while US and UK firms are reporting similar productivity boosts of around 11.5%, UK firms are more likely to make job cuts due to AI, alongside wider economic pressures. Meanwhile, US firms are broadly repurposing the time AI saves into R&D and the creation of new roles.