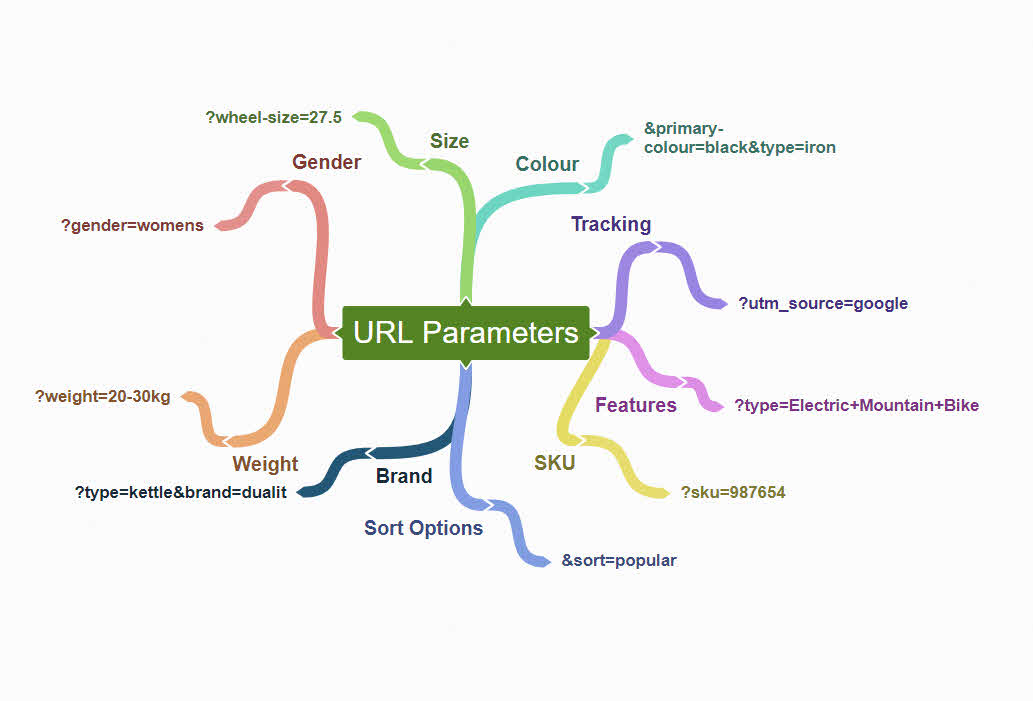

Website URL parameters are commonly used for tracking session IDs, filtering product category pages, powering search queries and more. Parameters are useful, but they can confuse search engines, resulting in page indexing issues and wasted crawl budget. So how do you avoid this? We take a look below and cover the following.

SEO Pitfalls of URL Parameters: Contents

- Common parameter issues

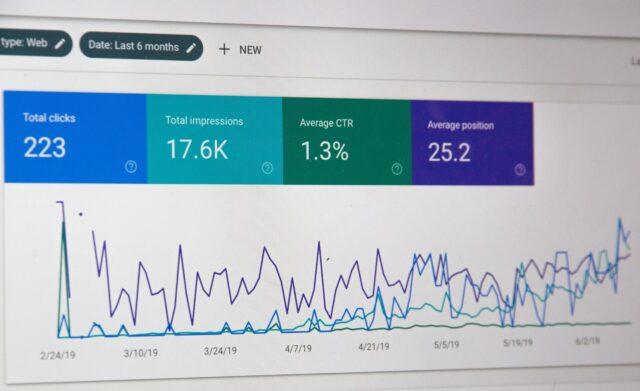

- Example of when parameters have impacted performance

- SEO options to address parameters

- Identifying if you have a parameter problem

Why do URL structures matter?

URLs help search engines to understand the content and structure of a site. A concise and descriptive page URL is also more likely to get clicks from the search engine results. This is not surprising, as a lengthy, parameter-packed URL is harder for the human brain to process and interpret.

1. Common issues with URL parameters

Incorrectly configured URL parameters can cause a range of issues, from creating duplicate content to keyword cannibalisation and wasting precious crawl budget.

Let’s take a look at the most common issues arising from URL parameters.

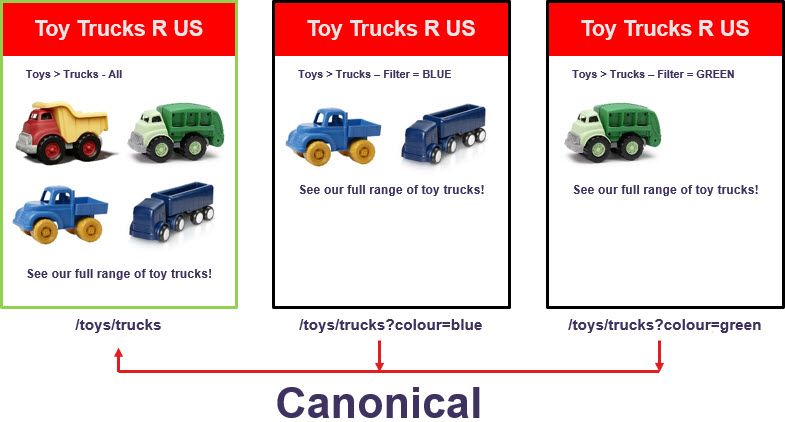

Duplicate content

URL parameters generated by website filters can create serious on-page issues that hurt page rankings, particularly on e-commerce sites. If your website lets users sort or filter content by price or feature (colour, size etc.), and these options only narrow the results rather than change the content of the page, this could hamper your website’s performance.

The following URLs would, in theory, all point to the same content: a collection of bikes. The only difference here is that some of these pages might be organised or filtered slightly differently. The URL parameter part begins with a question mark.

http://www.example.com/products/bikes.aspx

http://www.example.com/products/bikes.aspx?category=mountain&color=blue

http://www.example.com/products/bikes.aspx?category=mountain&type=womens&color=blue
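To make the point concrete, here is a minimal Python sketch that splits each of the three example URLs into the part before the question mark and its parameters. The helper name `split_url` is my own, for illustration only.

```python
from urllib.parse import urlsplit, parse_qs

urls = [
    "http://www.example.com/products/bikes.aspx",
    "http://www.example.com/products/bikes.aspx?category=mountain&color=blue",
    "http://www.example.com/products/bikes.aspx?category=mountain&type=womens&color=blue",
]

def split_url(url):
    """Return (base URL without the query string, dict of parameters)."""
    parts = urlsplit(url)
    base = f"{parts.scheme}://{parts.netloc}{parts.path}"
    # parse_qs returns a list per parameter; keep the first value for readability.
    params = {name: values[0] for name, values in parse_qs(parts.query).items()}
    return base, params

for url in urls:
    base, params = split_url(url)
    print(base, params)
```

All three URLs share the same base, which is why a search engine may treat them as competing versions of one page unless told otherwise.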

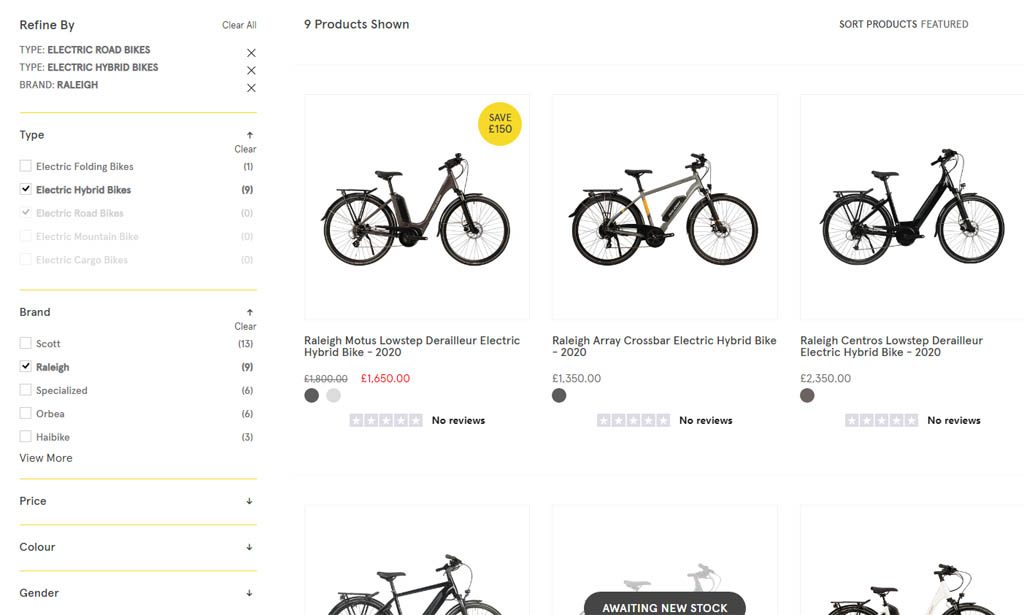

The examples shown above are typical of an e-commerce website’s faceted navigation. Faceted navigation is the set of pre-defined filter options that allow you to narrow down results by product type or other attributes. This ultimately helps you to find what you want, but it can lead to problems when parameters are used.

It is helpful for users to be able to narrow down brands, product types and sizes on e-commerce sites, but it can give search engines a headache if not managed correctly.

Keyword cannibalisation

Keyword cannibalisation occurs when multiple pages on a website target the same or similar keywords. In this situation search engines can struggle to determine which is the most appropriate page to rank for a particular search query. At best, this can lead to the “wrong” or “undesired” page ranking for that term; at worst, the page ranks poorly or not at all.

Wasted Crawl Budget

Search engines may be unable to crawl your website efficiently if there are lots of parameter-based URLs available to be indexed. If you think about the different filtering options and the ways they can be combined, it is easy to see how issues occur. I have seen websites with 50+ parameter versions of a single page that have struggled to be indexed and failed to rank as a result.

A few of the different URL filtering permutations are listed below to give you an idea of how quickly search engines can be presented with thousands of unnecessary pages to crawl.

Static URL: www.example.com/dining-furniture

Search URL: www.example.com/?q=dining-furniture

Brand Filter URL: www.example.com/dining-furniture?brand=stressless

Colour Filter URL: www.example.com/dining-furniture?colour=brown

Product Type Filter: www.example.com/dining-furniture?type=recliner

Product Type & Brand Filter: www.example.com/dining-furniture?type=recliner&brand=stressless
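To see how fast these permutations add up, the rough Python sketch below enumerates every URL that three small filters could produce. The filter values other than `stressless`, `brown` and `recliner` are hypothetical placeholders, and `filter_urls` is a name I have made up for this illustration.

```python
from itertools import product
from urllib.parse import urlencode

BASE = "https://www.example.com/dining-furniture"

# Hypothetical filter values; a real shop would usually have many more.
FILTERS = {
    "brand": ["stressless", "brand-b"],
    "colour": ["brown", "black", "grey"],
    "type": ["recliner", "chair"],
}

def filter_urls(base, filters):
    """Yield every URL produced by choosing zero or one value per filter."""
    names = list(filters)
    # None stands for "this filter is not applied".
    choices = [[None] + filters[name] for name in names]
    for combo in product(*choices):
        params = [(name, value) for name, value in zip(names, combo) if value is not None]
        yield f"{base}?{urlencode(params)}" if params else base

urls = list(filter_urls(BASE, FILTERS))
print(len(urls))  # 3 * 4 * 3 = 36 URLs, including the static one
```

This sketch keeps the parameters in a fixed order. If a site also allows the same filters in a different order (`?type=...&brand=...` as well as `?brand=...&type=...`), the number of crawlable URLs grows further still.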

2. Examples of Parameter Problems: When it All Goes Wrong

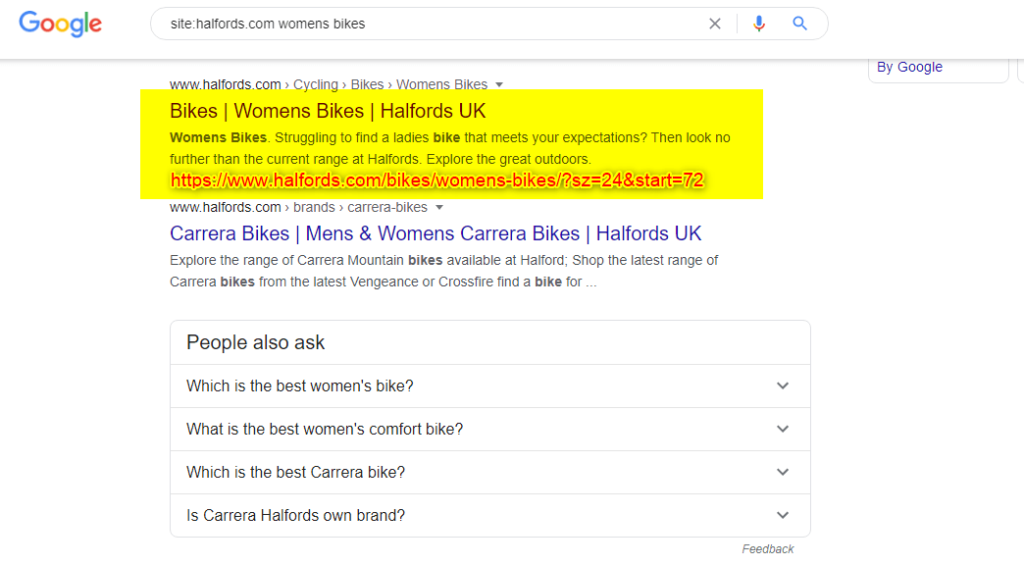

When I originally wrote this post, I discussed the problems that the Halfords website (www.halfords.com) was having due to parameters. I am pleased to say that the issues have mostly been resolved and that their category pages rank better than before, but the site still has some issues.

The Halfords.com website used URL parameters to dynamically serve product and search filter results for types of bikes, brands, sizes, styles, and kids or adult audiences. The problem for Halfords was that their site didn’t deal with the numerous dynamically generated parameter URLs, which ultimately created competing category pages for Google to index.

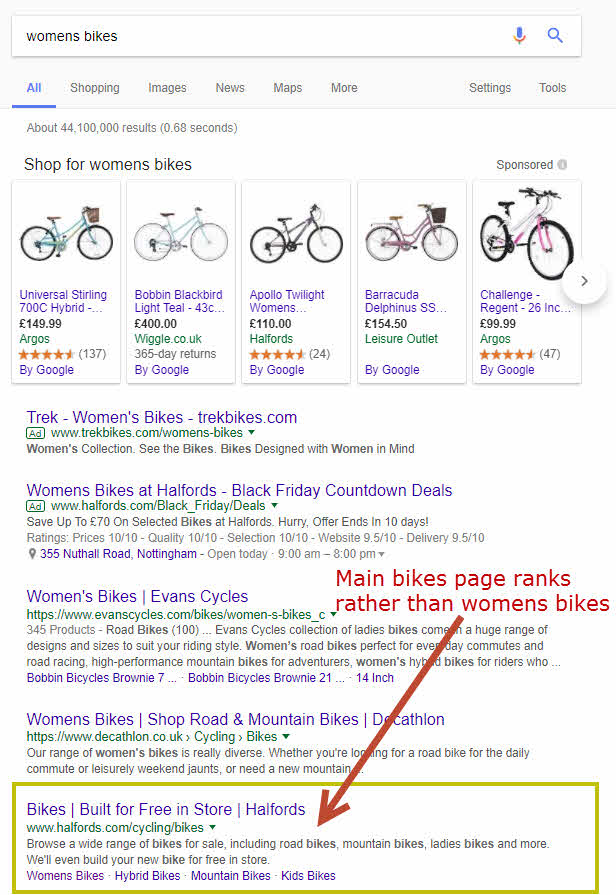

To illustrate how parameter URLs harmed Halfords’ organic performance, the screenshot below shows Google’s search results for “women’s bikes” at the time. For this search, Halfords’ main bike category appeared in the search results rather than their women’s bikes category page.

Decathlon’s and Evans Cycles’ women’s bikes category pages ranked, which makes sense as these pages best matched the search query “women’s bikes”. Unfortunately for Halfords, they had failed to address the numerous duplicate parameter pages targeting the same keyword phrases, which resulted in Google not ranking their women’s bikes page.

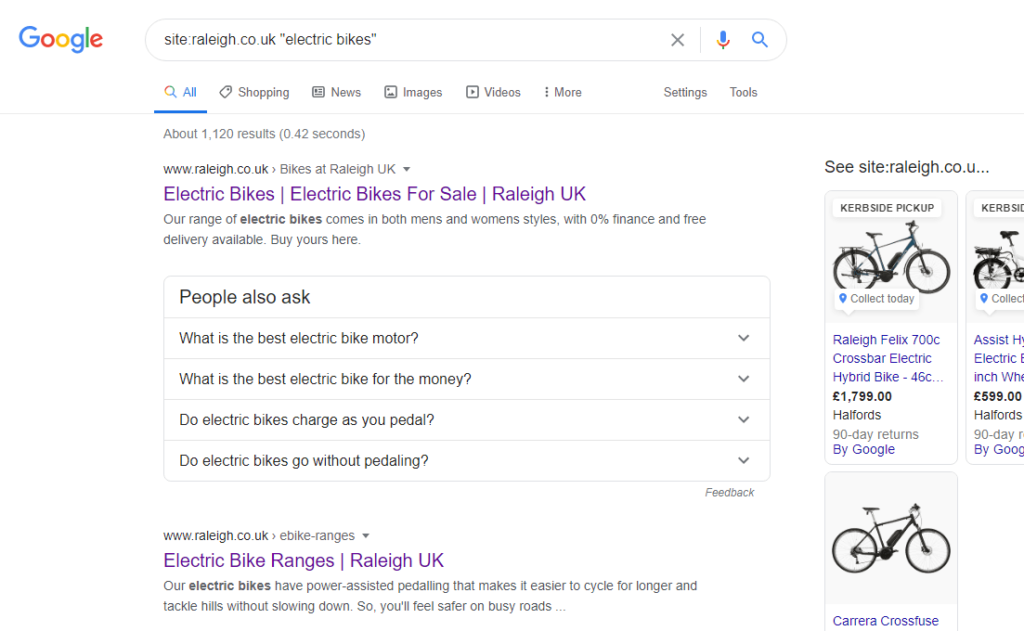

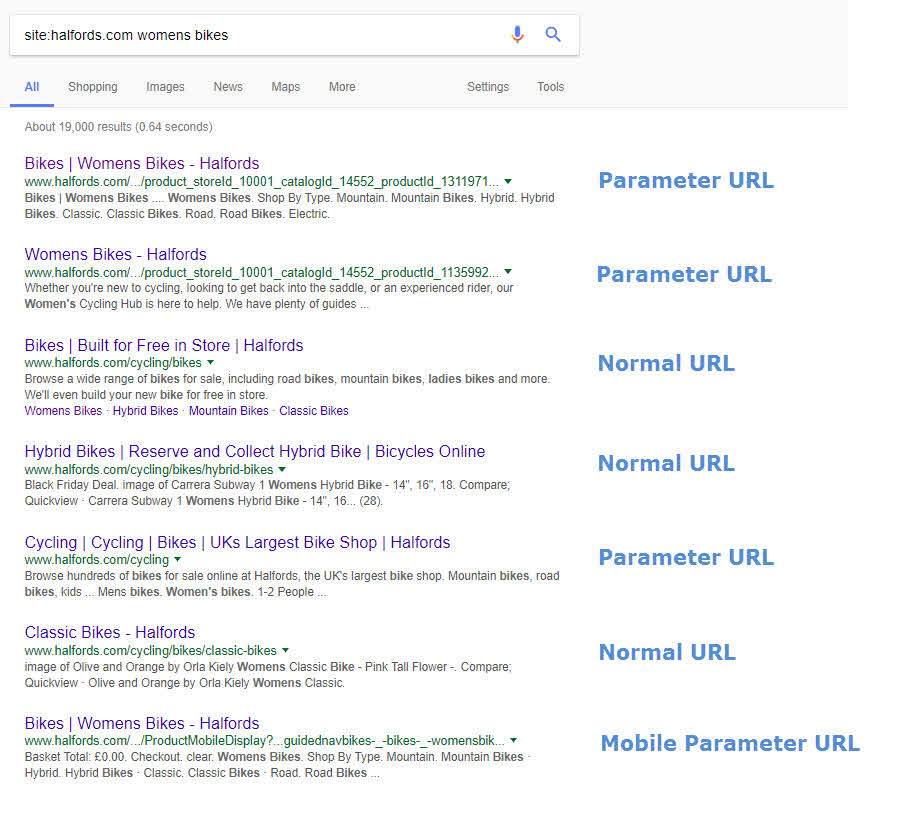

To understand the extent of Halfords’ problems, I ran a site command search on the Halfords domain (site:halfords.com women’s bikes) to identify competing pages for the term women’s bikes. The search results for this query revealed why the wrong page was ranking.

There were numerous duplicate parameter URLs for women’s bikes and the screenshot above includes two results with product_storeId_10001_catalogId_14552_productId_ in the URL.

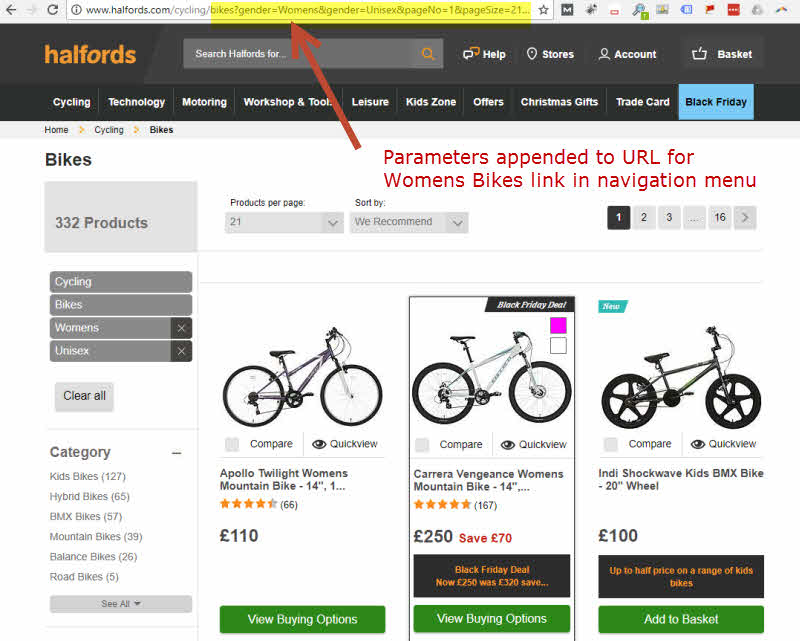

It was possible to find even more URLs available for women’s bikes queries on the website. The link within the main navigation menu took you through to a women’s bike page powered by parameters.

The product category filter options and additional search filter options created further pages that were competing for the same term. Halfords missed out on organic traffic because they were not using technical SEO techniques to avoid parameter issues and help Google understand which pages to index.

Ideally, your pages should use a search engine friendly URL structure, but you can still use parameters as long as you take the necessary steps to avoid duplication and indexing issues.

The fact that Halfords had multiple pages for women’s bikes, with variations of “women’s bikes” in their page titles and headings, held their performance back. This is a classic example of a website confusing search engines by having two or more pages covering the same topic, leaving search engines unsure of which page to rank.

Do Halfords still have a parameter problem?

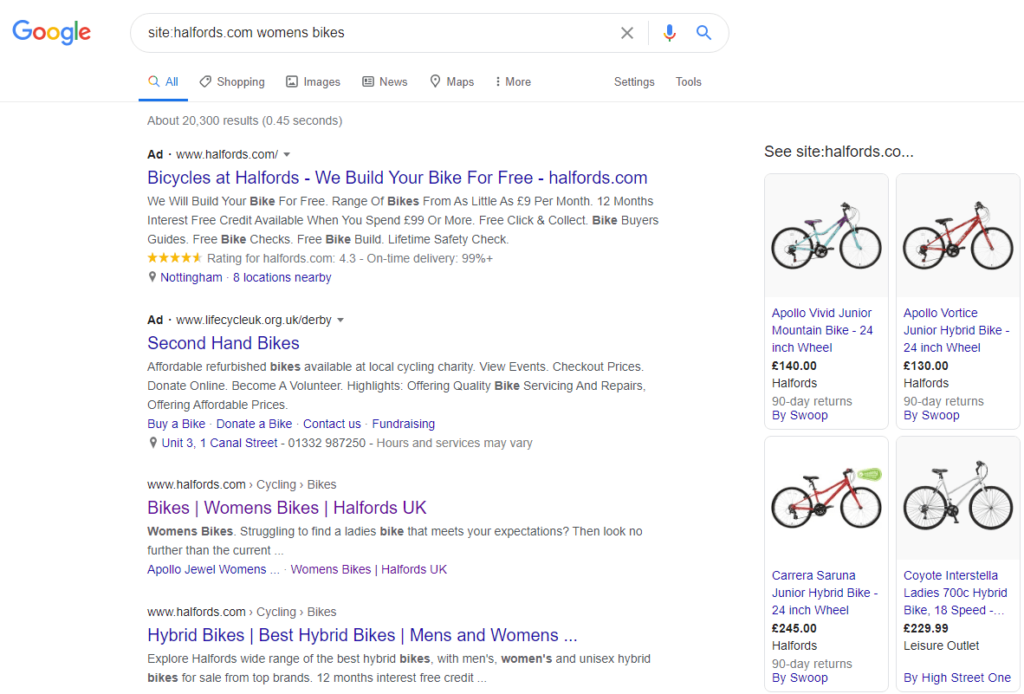

If we look at the site now using the same site command for site:halfords.com “womens bikes”, we can see that Halfords is ranking well for this term.

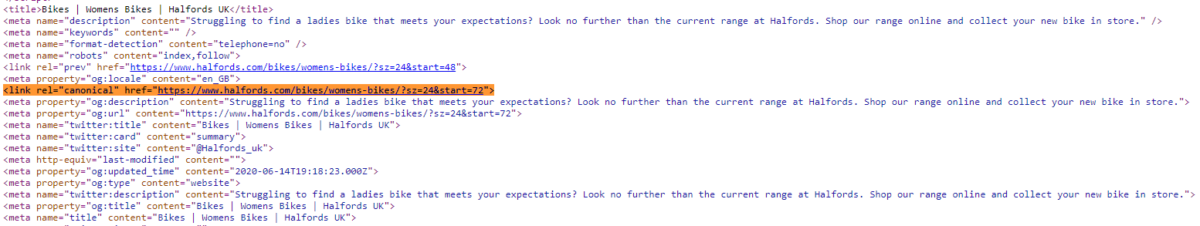

However, if we scroll down the search engine results we can see that the parameter problem that they had before has not completely gone away as the parameter page is still in Google’s index.

So why is this? If we look at the source code of the women’s bikes parameter page, it includes a self-referencing canonical tag rather than one telling Google that the main women’s bikes page is the page to index.

The next section explains how to resolve such issues and how to identify them.

3. How to identify and avoid pitfalls associated with URL parameters

There are a number of solutions to ensure URL parameters don’t cause any SEO issues on your website. But before you rush in and implement any of the fixes below, you need to check whether URL parameters could be causing your website problems by asking the following questions:

- When using a search filter on your website (see faceted navigation), does the URL change and the copy remain the same as the copy on the original URL?

- When using a search filter on your website, does the URL change and the page title and meta description remain the same or contain the same target keyword?

If you answered yes to one or both of these, URL parameters could be holding back the performance of your site in organic search, and it might be time to take action.

There are site crawl tools that you can use to determine where and how parameters are used on your site. A list of some of the common tools that you can use is below:

- Screaming Frog SEO Spider crawl tool. The free version allows you to crawl 500 URLs. The paid-for version enables you to set the crawl user agent to Google and also crawl unlimited URLs

- Ahrefs Site Audit tool – Included as part of a monthly subscription which starts at $99 a month

- Deepcrawl – Powerful cloud crawl software suitable for very large ecommerce sites.

Canonical tags

Canonical tags are used to indicate to search engines that certain pages should be treated as copies of a certain URL, and that any rankings should actually be credited toward the canonical URL.

Web developers can set the canonical version of a piece of content to the category page URL before a filter is applied. This is a simple solution to help direct search engine robots toward the content you really want to be crawled, while keeping filters on the site to help users find products closely related to their requirements. For more information on implementing rel="canonical" tags, see our guide on how to use canonical tags properly. We have also written a guide on how to implement canonical tags on Magento e-commerce sites.
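As a rough illustration of that idea, the Python sketch below builds the canonical tag a filtered page would carry by stripping the query string from its URL. It assumes every parameter on the page is a filter that should not be indexed; pages that use parameters for pagination or language would need different handling. The function name `canonical_link` is hypothetical.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

def canonical_link(url):
    """Build a canonical <link> tag pointing at the URL without its query string."""
    parts = urlsplit(url)
    # Drop the query string and fragment; keep scheme, host and path.
    clean = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(clean, quote=True)}">'

print(canonical_link("https://www.example.com/bikes?type=female"))
# <link rel="canonical" href="https://www.example.com/bikes">
```

The resulting tag belongs in the `<head>` of the filtered page, so that the parameter version points to the unfiltered category page rather than to itself.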

4. How to identify if you have a parameter problem

One way to check whether this is the case for your site is to test some of the filters available on one of your product category pages and assess whether the content changes significantly after the products have been filtered. For example, imagine that an original category page on a cycling website includes a paragraph or two of copy promoting a specific type of bike. When filters are applied using the faceted navigation to select female bikes, the URL of the page changes to include a query string (example.com/bikes?type=female). If the majority of the page content remains the same, these pages could be classed as duplicate content by Google if their relationship isn’t made clear to search engines.
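If you want a rough number rather than an eyeball comparison, the sketch below scores how similar two blocks of page copy are using Python’s standard `difflib` module. The sample copy is invented for illustration, and the score is only a crude indicator of my own choosing, not a measure that any search engine is known to use.

```python
from difflib import SequenceMatcher

def text_similarity(text_a, text_b):
    """Return a similarity ratio between 0 and 1, compared word by word."""
    return SequenceMatcher(None, text_a.split(), text_b.split()).ratio()

# Invented sample copy: the filtered page reuses the category copy unchanged.
category_copy = "Browse our range of bikes for every rider and every budget."
filtered_copy = "Browse our range of bikes for every rider and every budget."
rewritten_copy = "Women's bikes with lighter frames and adjusted geometry."

print(text_similarity(category_copy, filtered_copy))   # identical copy scores 1.0
print(text_similarity(category_copy, rewritten_copy))  # distinct copy scores much lower
```

A filtered page that scores close to 1.0 against its parent category page is a candidate for a canonical tag or one of the other fixes described in this post.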

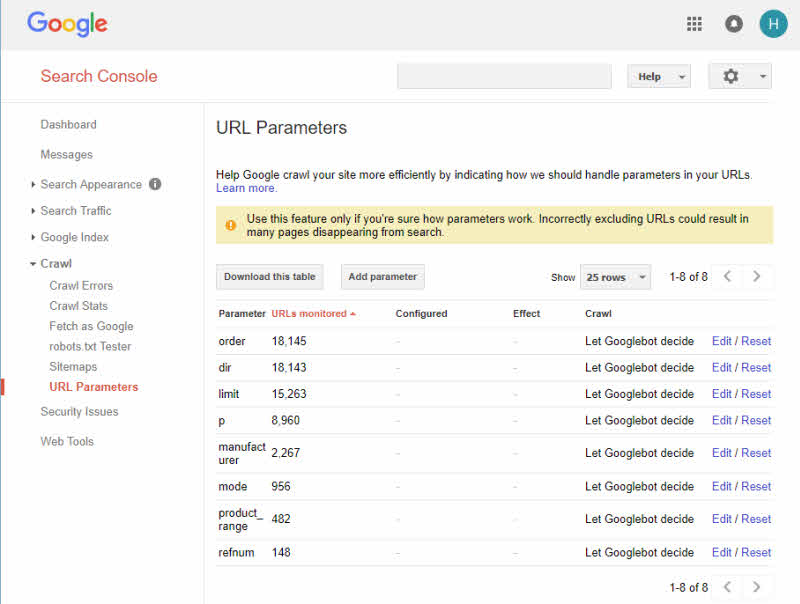

URL parameter tools

Use the URL Parameters tool within Google Search Console to give Google information about how to handle URLs containing specific parameters. Tread carefully when making changes, as you can easily exclude the wrong pages from Google’s index, which may result in a significant loss of traffic.

Bing Webmaster Tools also provides a tool for ignoring URL parameters. You can find a guide on how to use this tool here.

Robots.txt – disallowing query strings

The robots.txt file can help you remedy a duplicate content situation by blocking search query parameters from being crawled by search engines. However, before you go ahead and block all query strings, I’d suggest making sure everything you’re disallowing is something you definitely don’t want to be indexed. In the majority of cases, you can instruct search engines to ignore any parameter-based pages simply by adding the following line to your website’s robots.txt file:

Disallow: /*?*

This will disallow any URLs that feature a question mark. Obviously, this is useful for ensuring any URL parameters are blocked from being crawled by search engines, but you first need to ensure that there aren’t any other areas of your site using parameters within their URL structure.
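Before deploying a rule like this, it can help to test it against a handful of real paths. The Python sketch below is a simplified matcher of my own, assuming the wildcard behaviour Google documents for robots.txt (`*` matches any run of characters, a trailing `$` anchors the end). It ignores `Allow` rules, user-agent groups and rule precedence, so treat it as a sanity check rather than a full robots.txt parser.

```python
import re

def rule_to_regex(rule_path):
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape literal pieces and join them with ".*" where the rule had "*".
    pattern = ".*".join(re.escape(piece) for piece in rule_path.split("*"))
    return re.compile(pattern + ("$" if anchored else ""))

def is_blocked(path_and_query, disallow_rules):
    """True if any non-empty Disallow pattern matches from the start of the path."""
    return any(
        rule_to_regex(rule).match(path_and_query)
        for rule in disallow_rules
        if rule  # an empty Disallow value blocks nothing
    )

rules = ["/*?*"]
for candidate in [
    "/dining-furniture",
    "/dining-furniture?brand=stressless",
    "/?q=dining-furniture",
]:
    print(candidate, is_blocked(candidate, rules))
```

With the single rule above, the static URL is left crawlable while both parameter URLs are blocked, which matches the behaviour described for the question mark rule.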

To do this, I’d recommend carrying out a crawl of your entire website using a tool like Screaming Frog’s SEO spider, exporting the list of your website’s URLs into a spreadsheet and carrying out a search within the spreadsheet for any URLs containing question marks (?).
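If the export is large, a short script can do the spreadsheet search for you. The sketch below counts how many crawled URLs use each parameter name. It assumes a CSV export with the URL in a column called `Address`; check the header of your own export and adjust `url_column` if it differs. The sample data is made up from the example URLs used earlier in this post.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlsplit, parse_qsl

def parameter_counts(csv_file, url_column="Address"):
    """Count how many crawled URLs use each query parameter name."""
    counts = Counter()
    for row in csv.DictReader(csv_file):
        query = urlsplit(row[url_column]).query
        # Use a set so each parameter name is counted once per URL.
        names = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
        counts.update(names)
    return counts

# Stand-in for a real crawl export file.
sample = io.StringIO(
    "Address\n"
    "https://www.example.com/dining-furniture\n"
    "https://www.example.com/dining-furniture?brand=stressless\n"
    "https://www.example.com/dining-furniture?colour=brown\n"
    "https://www.example.com/dining-furniture?type=recliner&brand=stressless\n"
    "https://www.example.com/?q=dining-furniture\n"
)

for name, count in parameter_counts(sample).most_common():
    print(name, count)
```

Any parameter name you don’t recognise in the output, such as one used for language variants, is a signal to investigate before blocking all query strings.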

Common things to look out for here would be the use of URL parameters to serve different language variants of a page, which in itself is a bad idea. If this is the case, you don’t want to block search engines from crawling those variants via robots.txt. You’ll need to instead look into implementing a viable URL structure to target multiple countries.

If you’ve worked through the list of URLs and confirmed that the only pages using URL parameters are those causing duplicate content issues, I’d suggest adding the above directive to your website’s robots.txt file.

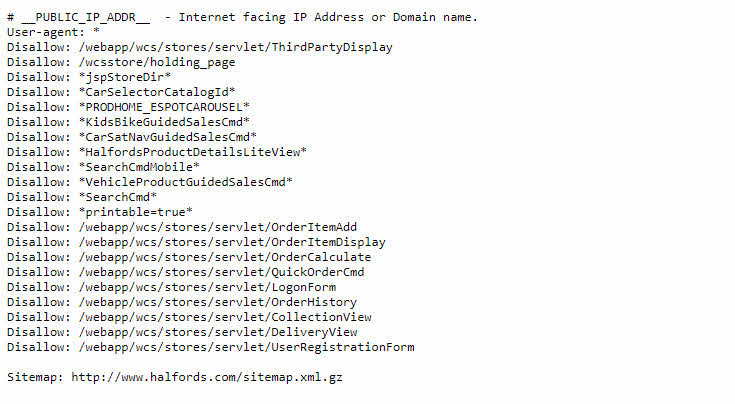

The image below shows Halfords’ robots.txt file when we first looked at the issues. At this point none of the URL parameters were blocked.

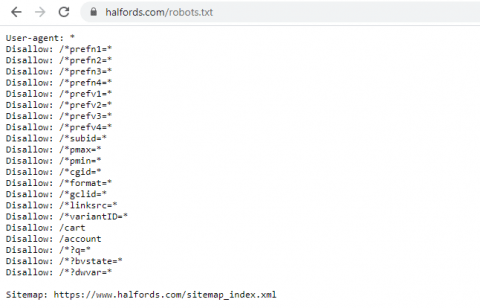

If we look at the current robots.txt file for this site it shows that some of the parameters have been blocked.

Conclusion

Using a faceted navigation can prove extremely useful for consumers looking for specific products within your website, but you need to ensure that any URLs generated as a result of filters being applied don’t hold back the performance of your original category pages in organic search results.

While I have detailed three of the most common fixes for URL parameters, every website platform is a little different. You should therefore assess each situation on a case-by-case basis before implementing any of the solutions I’ve described.
