This article is designed to help web professionals looking to maximise Core Web Vital scores, or to troubleshoot performance issues.

Achieving 100% overall performance scores on desktop and mobile for our WordPress sites has taken many years of iteration. It is not easy. This advice, compiled from our multi-disciplined delivery team’s years of experience building fast websites for clients, can apply to any CMS platform, but has a WordPress focus as it is our preferred CMS.

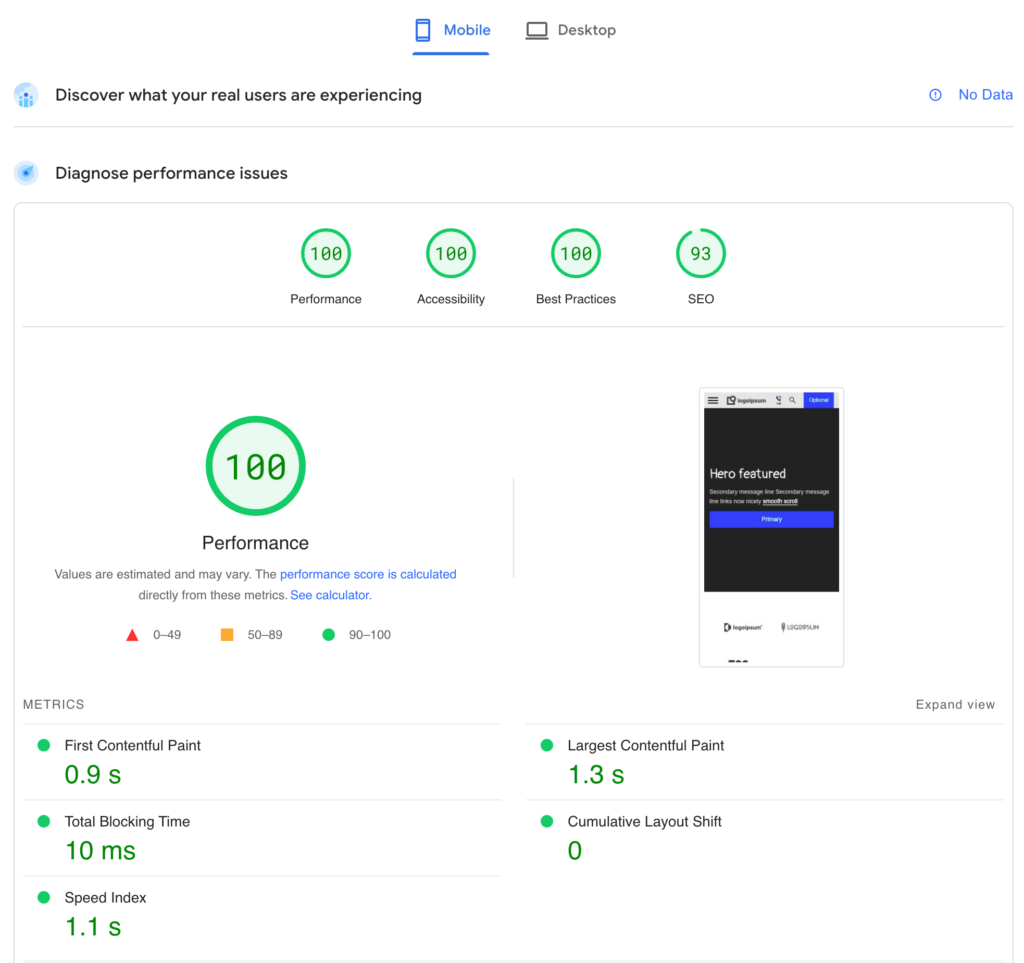

To measure performance, we use Lighthouse scoring via PageSpeed Insights, which breaks down key metrics and provides an overall score for both mobile and desktop. The mobile test runs in a consistent environment that emulates a lower end mobile device. We also use Chrome’s CrUX dashboard, which visualises real world performance data from actual user visits across all devices and locations.

Fast sites are important: speed is part of Google’s Page Experience and ranking algorithm, can help reduce Cost Per Click through better quality scores in paid advertising, and improves a website’s sustainability credentials. Perhaps most importantly, it improves conversion rates through a better user experience.

While aiming for 100% scores, my general recommendation is to also pay attention to CrUX data alongside the single percentage score Lighthouse provides as it is a better reflection of how your users experience performance on your site. For all our new site launches, we consistently see 95%+ of users experiencing ‘good’ Core Web Vitals across all devices within CrUX data and 90%+ mobile speed scores within PageSpeed Insights, and we can do this because of the measures we’ve put in place below.

Load critical assets within first 6 requests

When building a new site or addressing performance issues, you will want to pay attention to the loading order of the key files required to display above the fold content, as it affects the First Contentful Paint (FCP), Largest Contentful Paint (LCP) and Speed Index metrics.

Critical assets required for above the fold display will usually include:

- The main stylesheet containing your website’s above the fold CSS, unless it is inlined (more on this later)

- The font .woff2 file(s) required for above the fold font display unless you’re using ‘web safe’ fonts

- The LCP image, if the largest above the fold element is an image rather than text.

Note: a video can also be the LCP element. Chrome measures its poster image or, in more recent versions, the first frame that is rendered

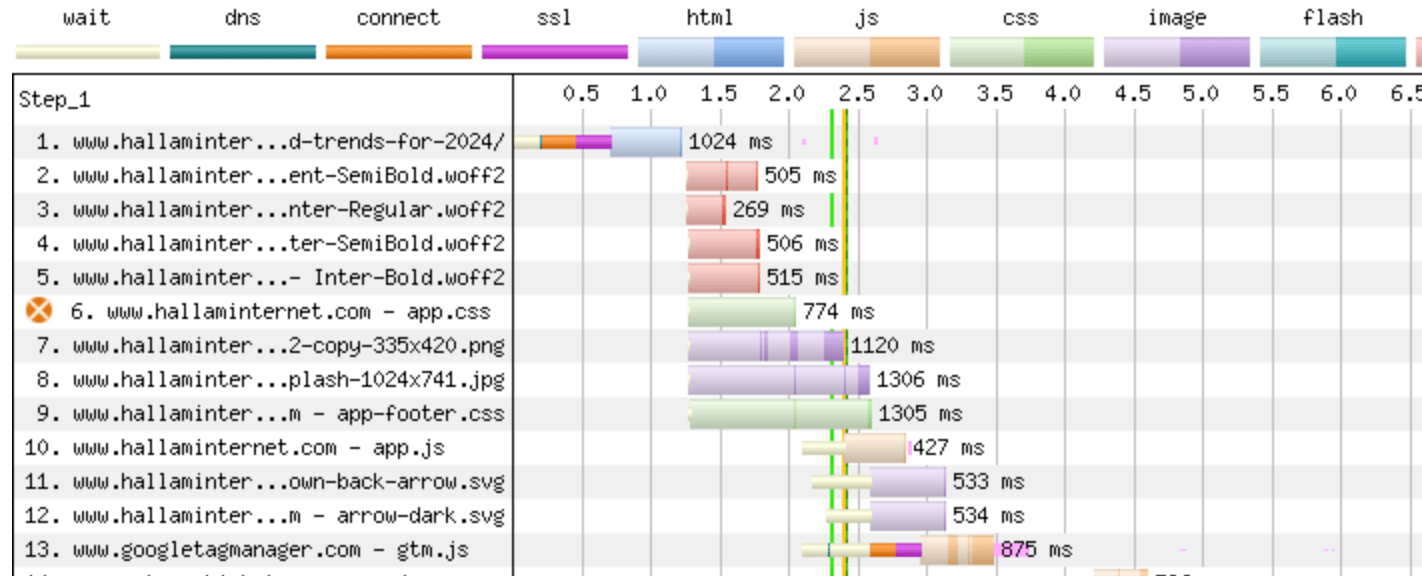

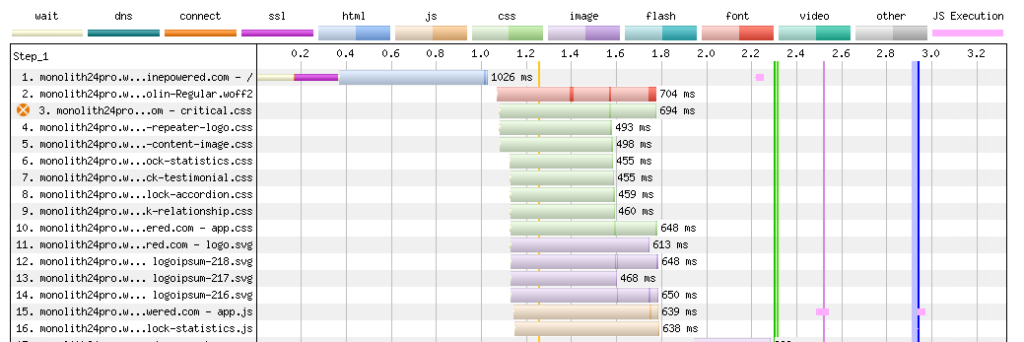

The first few requests are important because of how the browser schedules downloads. HTTP/2, which should be available on all modern hosting environments, multiplexes many requests over a single connection (browsers using HTTP/1.1 were limited to around 6 parallel connections per host), but those requests still share the same bandwidth, so the assets requested first tend to arrive first. You can see HTTP/2 in action on the waterfall diagram generated by webpagetest.org, where the LCP image, main stylesheet CSS file and key .woff2 font files are requested virtually at once in the initial 8 requests.

This is followed by staggered requests for less important assets that can afford a small delay, such as the site’s main app.js file, the GTM script etc.

There are many reasons why your critical assets might not be loaded in the first few requests. Plugins for example may add additional render blocking scripts or styles, you may have mega menu images loading before an LCP hero image, or your site may rely on JavaScript to render fonts.

Whatever techniques are used to render the content, it’s important to keep coming back to the focus on critical assets, which can be optimised by:

1. Preloading fonts

You should preload any above the fold .woff2 font files using the below <link> tag within the <head> element of your site so the fonts can be downloaded in advance of whatever stylesheet or CSS they are being requested from:

<link rel="preload" href="https://www.example.com/full-path-to-font-folder/web-font.woff2" as="font" type="font/woff2" crossorigin>

Fonts are usually served faster too when they are hosted directly on your own server rather than by a third party font service such as Typekit, as this avoids additional DNS lookups and SSL connections to a different domain.

Remember too to use the font-display: swap; descriptor in your @font-face rules, so text is displayed immediately in a fallback font and swapped once the web font has been retrieved.
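As a rough sketch of how the preload and font-display advice fit together, the markup below preloads a single font file and declares it with a swap fallback. The family name "Example Sans", the /fonts/ path and the fallback stack are placeholders for illustration, not values from a real site.

```html
<head>
  <!-- Preload the above the fold font so the download starts before the CSS
       that references it has been parsed. The crossorigin attribute is needed
       for font preloads, even when the font is served from the same origin. -->
  <link rel="preload" href="/fonts/web-font.woff2" as="font" type="font/woff2" crossorigin>

  <style>
    /* "Example Sans" is a hypothetical family name */
    @font-face {
      font-family: "Example Sans";
      src: url("/fonts/web-font.woff2") format("woff2");
      font-weight: 400;
      font-style: normal;
      /* Show text in the fallback font straight away, then swap */
      font-display: swap;
    }

    body {
      font-family: "Example Sans", Arial, sans-serif;
    }
  </style>
</head>
```

The URL in the preload tag needs to match the URL in the @font-face rule exactly, otherwise the browser is likely to download the font twice.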

2. Add a high fetch priority to LCP images

It is important to ensure your largest above the fold image, especially if the image is the LCP element, is the first image that is requested by the browser even ahead of the site’s main logo.

The fetchpriority attribute, which now has good browser support, should be added to the image tag alongside an ‘eager’ loading attribute to prioritise the request for the above the fold LCP image:

<img fetchpriority="high" loading="eager" …>

All other below the fold or offscreen images should be lazy loaded using loading="lazy", without the fetchpriority attribute.
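To illustrate the difference, here is a minimal sketch of an LCP hero image next to a below the fold image. The file paths, dimensions and alt text are placeholders.

```html
<!-- Above the fold LCP image: request it early and never lazy load it -->
<img src="/images/hero.jpg" alt="Description of the hero image"
     width="1600" height="900" fetchpriority="high" loading="eager">

<!-- Below the fold image: the browser can hold this back until it nears the viewport -->
<img src="/images/team.jpg" alt="Description of the team photo"
     width="800" height="600" loading="lazy">
```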

3. Use critical chaining information to identify opportunities

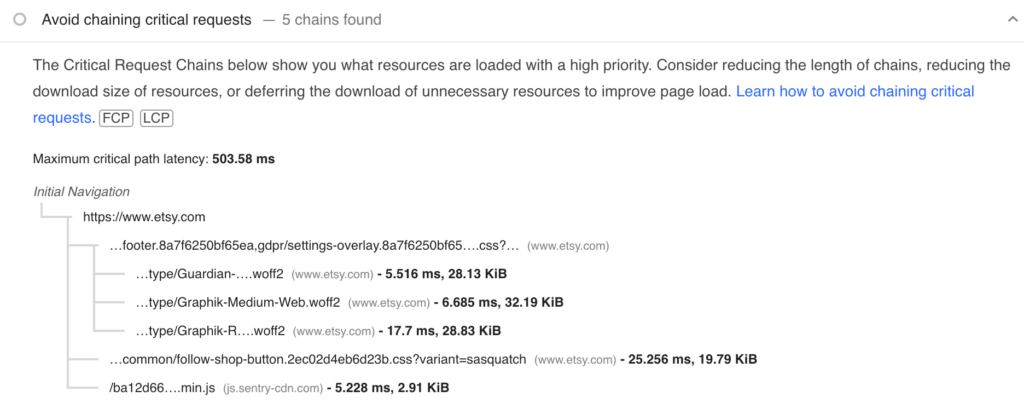

When running a Lighthouse or PageSpeed Insights report, look out for the ‘Avoid chaining critical requests’ diagnostic section. If fonts aren’t preloaded, you’ll likely see a critical request chain showing the root of the site and the CSS where the fonts are requested from.

If you’re not deferring JS or CSS, or inlining critical CSS (more details later on), you’ll see these CSS and JS file(s) within this critical chain. Aim for as few files as possible in the critical chain, with the gold standard being zero chained requests.

Streamline delivery of JavaScript

JavaScript is used on virtually every website from adding simple menu hover effects through to the generation of entire websites and is key to bringing interactivity and engagement along with business intelligence tools like tracking and analytics.

Within the Lighthouse scoring mechanism, JavaScript will often have a direct impact on the Total Blocking Time (TBT), which accounts for 30% of overall scores, and an indirect impact on other metrics; if the CPU is tied up executing scripts as soon as a user hits a page, this can delay rendering and, in turn, the LCP and Speed Index.

Any JavaScript task that takes longer than 50ms to execute is considered a ‘long’ task, as it will block the main thread from executing anything else. Only the time beyond 50ms counts towards TBT, so a 120ms task adds 70ms. For top scores, the Total Blocking Time of all the combined JavaScript should be 60ms or less, which barely gives any headroom at all for complex scripts.

When thinking about any JavaScript usage on a site, it’s important to consider all third party tools used on the site, whether JS is needed to display above the fold content, and the role of JS frameworks in your tech stack.

1. Audit your scripts

Understanding which scripts have the biggest impact and cause the worst of the performance bottlenecks is the first step to fixing them. The tools below can help quickly identify and visualise CPU intensive scripts.

Lighthouse script breakdown

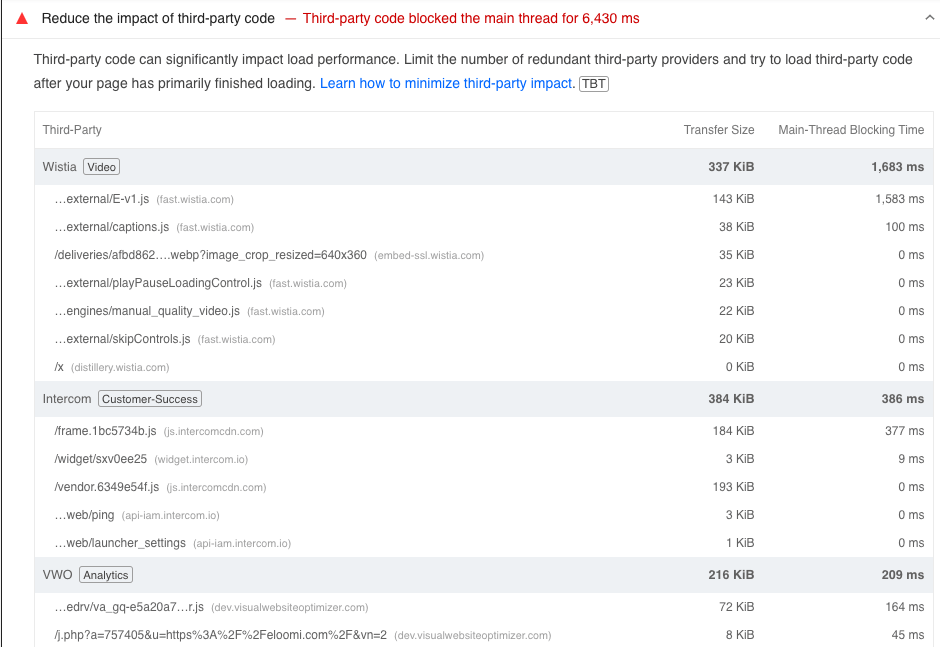

When troubleshooting performance issues caused by scripts (you’ll see this in high TBT and often high LCP values), I usually start with the ‘Reduce JavaScript execution time’ and ‘Reduce the impact of third-party code’ audits, which list the most impactful scripts at the top.

You can see from the below example site a large amount of thread blocking time from several Wistia video embed scripts, live chat (Intercom) and VWO analytics. Considering top scores are only achieved with 60ms or less of Total Blocking Time, the use of any one of these scripts will likely start to lower scores.

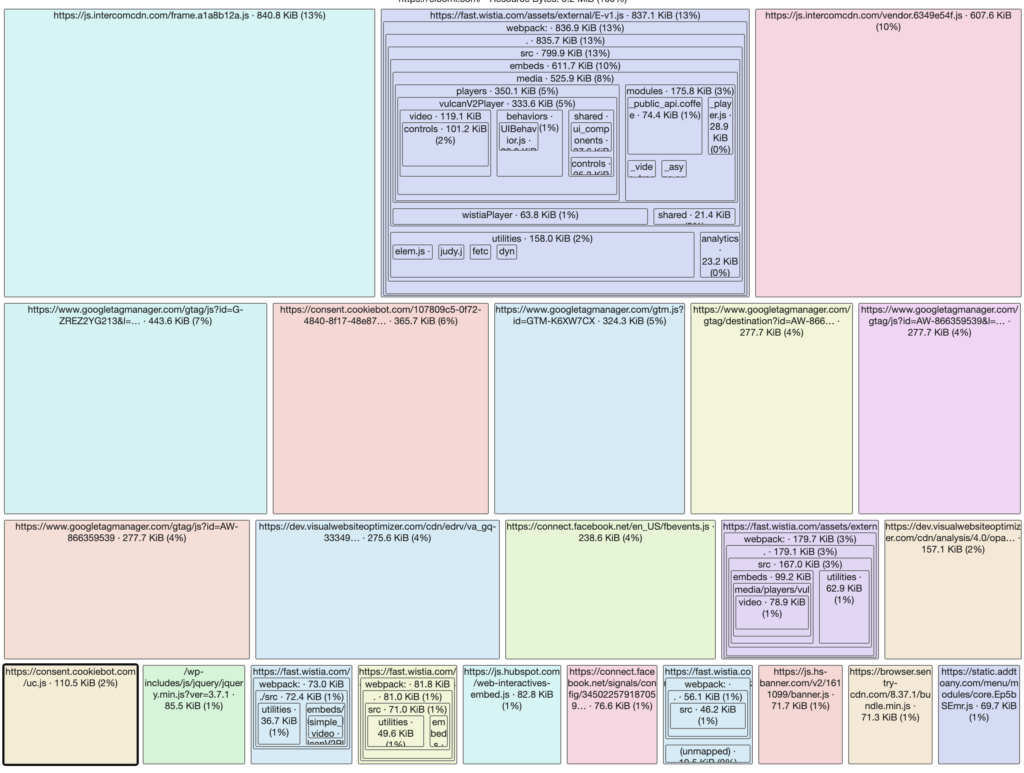

Lighthouse Treemap

The impact of scripts can be visualised nicely in the Treemap view, also accessible from PageSpeed Insights. Scripts are sized by file size, with the largest scripts taking up the biggest boxes, but this doesn’t necessarily correspond to the scripts that take longest to execute.

In the example below, it’s easy to see the largest scripts are the live chat, Wistia video embed, several GTM scripts followed by the VWO A/B testing tool.

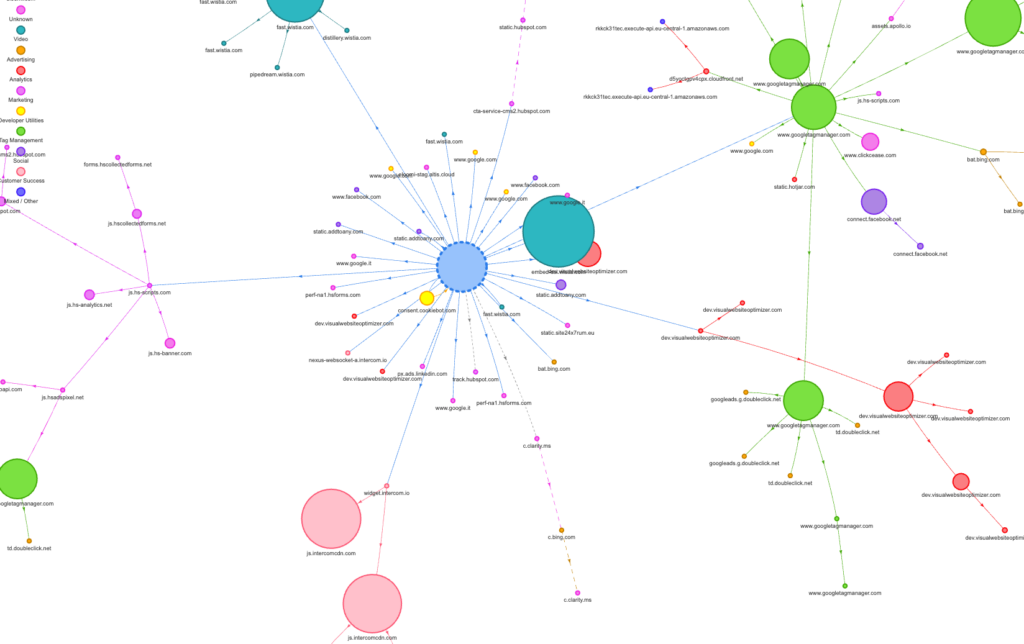

Visualisation of third party scripts

For a more detailed visualisation of requests from third party domains (usually, but not always, JS scripts), I often use this mapping tool, which displays requests as nodes and the connections between them. Larger nodes represent larger files and, generally speaking, larger nodes and a greater number of nodes will be worse for performance.

The interconnection of nodes can also help determine which scripts are called from which source and the colour coding shows the different types of request.

The below diagram shows the requests from the same example site, with multiple and larger nodes for several GTM requests (in green), live chat and VWO (in shades of red), Wistia (teal) and various HubSpot scripts (magenta).

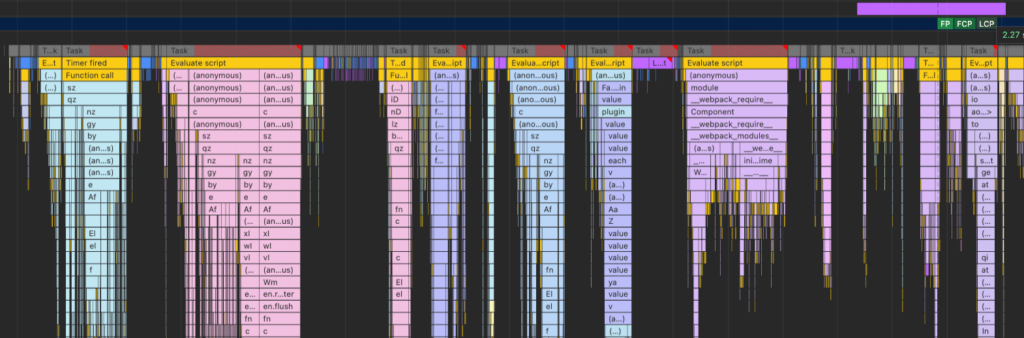

Chrome’s Performance panel

If it’s not obvious from the above tools exactly which scripts are causing the biggest performance bottlenecks, taking a deeper dive using the Performance panel in Chrome DevTools can provide more useful insights such as:

- Which scripts are executing before the first and largest paints (FCP and LCP shown below); it’s important to minimise the JS executed before FCP and LCP where possible

- Which scripts (highlighted in yellow) take the longest to execute

- What the ‘long’ tasks are (shown with the red diagonal lines), i.e. tasks that take longer than 50 milliseconds, which block the main thread and add to TBT

- What functions make up long tasks which can be drilled into below each top level JS task

Long tasks can also impact the Interaction to Next Paint (INP) metric. If, for example, a user taps the hamburger menu, which needs a bit of JS to fire, but the CPU is tied up executing other JavaScript, there will be a greater delay before the interaction can be handled.

2. Find the right balance with business intelligence tools

Business requirements for tracking and analytics will often mean third party tools such as Google Analytics, Hotjar, Google Tag Manager, VWO etc. are loaded globally when users hit any page on the site. Sometimes there’s little that can be done to avoid third party scripts altogether, but there are plenty of options to minimise their impact on performance.

- Do you need A/B testing tools and heatmap tracking running 24/7? These can be disabled when enough data has been gathered

- Try to minimise third party platforms that have similar functions running at the same time

- GDPR cookie banners that block cookies until consent is given can actually help performance, as they will usually hold back cookie setting scripts up front

- Look at combining multiple GTM accounts into a single account

- Using server-side tagging with GTM can move much of the tag processing off the user’s device, helping to reduce the performance impact of GTM scripts

- Defer scripts and tags within GTM to execute when the page is loaded (more below)

Using a few of these tools is likely to mean 100% scores are not achievable, but that doesn’t mean the impact on user experience and organic rankings will be anything more than negligible. The above pointers should help, and keep an eye on CrUX data too, which provides a more meaningful indicator of how users experience performance.

3. Conditionally load scripts when needed

For on-site scripts that you control, look at conditionally outputting each script request only when it is needed.

Common scripts that can be loaded on demand include:

- Embeds such as YouTube or Wistia videos or HubSpot forms. If the embeds are displayed below the fold or in offscreen modals, the scripts or parent iframes can be lazy loaded, with the relevant script(s) only loading as the embed is scrolled into the viewport

- Live chat scripts are often heavy, and ideally these would not execute globally but sit on a dedicated contact / support page. If that’s not feasible, consider triggering the live chat widget once the user has interacted with the page, such as on a scroll action, rather than on load.

- reCAPTCHA scripts and other JS associated with forms should only be output on pages with a form.

- Componentise scripts as far as possible. For example, if you have an animated infographic, load and execute its JS only on the pages with that component and, ideally, only when the component is in the viewport.
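Two of the patterns above can be sketched roughly as follows: loading an embed script only when its container approaches the viewport, and loading live chat after the first user interaction. The loadScript helper, the #video-embed element and both script URLs are hypothetical placeholders, and real embed providers may need extra initialisation.

```html
<div id="video-embed"><!-- lightweight placeholder or poster image --></div>

<script>
  // Hypothetical helper: inject a script tag on demand.
  function loadScript(src) {
    const script = document.createElement('script');
    script.src = src;
    script.async = true;
    document.head.appendChild(script);
  }

  // 1. Load the embed script only when its container nears the viewport.
  const target = document.getElementById('video-embed');
  const embedSrc = 'https://video.example.com/embed.js'; // placeholder URL
  if (target && 'IntersectionObserver' in window) {
    const observer = new IntersectionObserver((entries) => {
      if (entries.some((entry) => entry.isIntersecting)) {
        observer.disconnect(); // only load once
        loadScript(embedSrc);
      }
    }, { rootMargin: '200px' }); // start loading slightly before it is visible
    observer.observe(target);
  } else if (target) {
    loadScript(embedSrc); // no observer support: load straight away
  }

  // 2. Load the live chat widget after the first user interaction.
  let chatLoaded = false;
  function loadChat() {
    if (chatLoaded) return; // guard against several events firing
    chatLoaded = true;
    loadScript('https://chat.example.com/widget.js'); // placeholder URL
  }
  ['scroll', 'pointerdown', 'keydown'].forEach((type) => {
    window.addEventListener(type, loadChat, { once: true, passive: true });
  });
</script>
```

The trade-off is a short delay before the embed or chat widget becomes available, which is usually acceptable for content the user cannot see or use on first load.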

4. Do not use client-side JavaScript for content generation

The proliferation of powerful JavaScript platforms like React or Next.js, alongside modern CMS platforms that utilise these JS frameworks, has led more developers to use these tools in a way that means website content and the underlying HTML are generated via Client Side Rendering (CSR) in the browser, rather than by the server.

If your website content is not visible with JavaScript disabled, then you have a problem. From a performance point of view, a reliance on CSR will mean associated scripts need to be downloaded, parsed, and executed by the browser which will almost always result in a greater impact on FCP, LCP and TBT metrics vs sending resulting markup direct to the browser by the server.

This is especially true for lower end devices, where additional resources are required for both bandwidth and CPU processing. From an SEO point of view this is also worse, because various search engine crawlers will have difficulty with, or delays in, crawling JS generated markup.

If you’re using a JS framework or CMS that relies on JS to render content, make sure to use Server Side Rendering (SSR) or Static Site Generation (SSG) to deliver the resulting HTML direct to the browser.

5. Use above the fold JS sparingly

Even if you’re not using JavaScript extensively, make sure JS is not used in a way that can delay the appearance of the above the fold elements.

A fading animated effect on a hero unit, for example, might seem visually appealing, but this kind of animation could add seconds to your FCP, LCP or Speed Index metrics, which collectively account for 45% of overall Lighthouse scores.

Carousels too, which are almost always JS driven, are a common pitfall that can impact performance if not dealt with correctly. If you must use a carousel (we often advise against them from a user perspective), then you should make sure the first slide can be rendered with JavaScript disabled, and use CSS to style the first slide in a way that avoids any layout shifting when the carousel JavaScript has rendered the full slider.

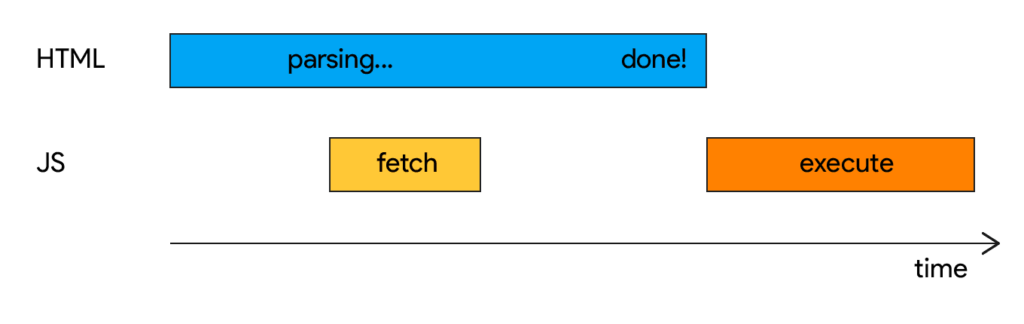

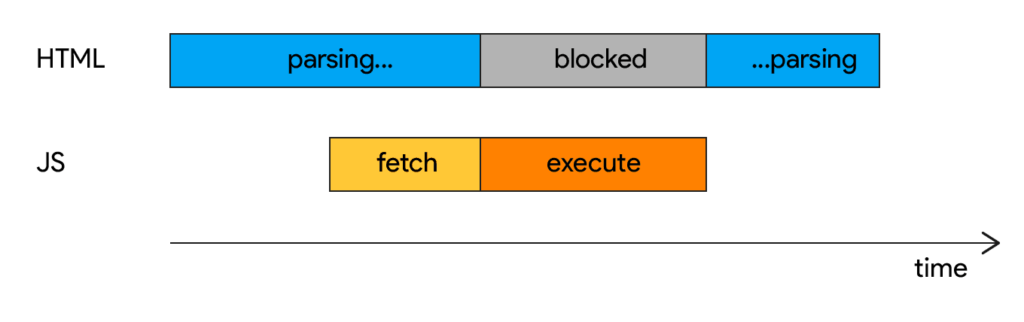

6. Defer scripts where possible, async if necessary

Standard requests to JS files, even if they are the last requests in the HTML, can still interrupt the rendering of a page, as HTML parsing is paused until the script has fully downloaded and executed.

Any script not required for critical above the fold display should be deferred with the defer attribute, allowing the JS file to be downloaded but not executed until the HTML has fully downloaded and parsed.

The async attribute, on the other hand, lets the file download in parallel without blocking HTML parsing, but the script executes as soon as it has downloaded, which can interrupt parsing and rendering at that point. Use async for more important JS files that need to execute earlier in the process, whilst making every effort to avoid JS for above the fold rendering.

For best performance and to minimise the total blocking time, aim to defer as many scripts as possible including analytics and third party scripts and everything below the fold.

For scripts set up as tags within GTM, use the ‘Window Loaded’ page view trigger as far as possible, which holds tags back until the page and its initial resources, such as images and scripts, have fully loaded.
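As a quick reference, the three loading behaviours described in this section look like this in markup. The file names are placeholders.

```html
<head>
  <!-- No attribute: HTML parsing pauses while this downloads and executes.
       Avoid for anything not needed for above the fold display. -->
  <script src="/js/blocking.js"></script>

  <!-- async: downloads in parallel with parsing and executes as soon as it
       arrives, in no guaranteed order relative to other scripts. -->
  <script async src="/js/early-feature.js"></script>

  <!-- defer: downloads in parallel with parsing and executes in document
       order once the HTML has been fully parsed. -->
  <script defer src="/js/app.js"></script>
</head>
```

Because deferred scripts keep their document order, defer is generally the safer default when one script depends on another.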

7. Do you really need a JS library?

If you really want to go after the very top scores, it’s worth considering if you really need a JS library or framework at all. Any front end facing .js file sent to the browser is going to need downloading, parsing and executing which, depending on how it is loaded, will likely impact CWV in different ways.

If the JS file is requested within the <head> element without a defer or async attribute, it will be render blocking. If it’s outside the <head>, unless it is deferred, it could tie up the CPU and impact the TBT metric, which in turn could interrupt the rendering of above the fold elements, impacting LCP or Speed Index.

As part of our drive for top speed scores, we don’t rely on any third party JS library including jQuery, animation libraries or otherwise and instead favour native ES6 JavaScript which is constantly evolving with greater capabilities.

We’ll often use JavaScript for parallax, or carousel style animations and interaction but try to keep this to a minimum if CSS animations can be used instead such as focus and hover transitions.

Optimise output of CSS

1. Use a CSS framework you control

Off the shelf CSS frameworks such as Bootstrap or Foundation can provide a convenient and consistent way to build out visual components easily using a set of pre-configured styles. Out of the box, however, there will usually be a performance hit due to additional CSS files, CSS selectors or CSS overrides you may need to apply.

When choosing a CSS framework, it is important to consider the below:

- Are there any additional render blocking styles output in the <head> of the site? At most you want a single CSS file in the <head>

- Is all CSS provided by the framework minified? This is a must have.

- How many CSS overrides are likely to be required? If you’re fighting a lot of default styles with several overrides, this can quickly bloat your CSS as well as making it harder to manage in future.

- Do you need to add a lot of classes at HTML level? HTML bloat is an important performance factor too, and you want to avoid repeating long lists of CSS classes within your HTML markup as far as possible.

- Is it possible to strip out CSS classes that are not being used anywhere on the site? Having hundreds of unused CSS selectors will be a big performance hit.

- Is it possible to generate and output CSS at page level only for the blocks and components used on that page? Global CSS not required at page level will require unnecessary bandwidth and processing.

- Is it possible to split out critical CSS required for above the fold content and defer everything else? Minimising CSS loaded in the <head> will speed up FCP, LCP and Speed Index metrics.

- Does the file size of the minified and compressed above the fold CSS come within 14KB on the server when combined with any overrides? 14KB is the threshold for effective inlining of critical CSS, as it is roughly what a server can deliver in its first network round trip

It is unlikely most CSS frameworks will fulfil all of the above criteria. For these reasons, we do not use a CSS framework and favour a more custom approach using SASS and compiled CSS as described below.

2. Separate above the fold, global and component level CSS

Our base theme utilises SASS partials that map to the blocks and components in our underlying design system. These partials are compiled and output so that:

- There is a single CSS file that deals with all global above the fold content, which includes header elements, navigation and hero units.

- Individual CSS files are loaded at block level, outside the <head>, only on pages that use specific individual blocks

- All remaining below the fold global CSS is taken care of by a separate CSS file loaded outside the <head>. This includes footer navigation, global footer CTA units and off screen elements such as hamburger and drop down navigational styles.

The below the fold CSS should then be deferred by loading the style sheets asynchronously, otherwise the CSS outside the <head> will still be considered as render blocking.
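One widely used way to load a stylesheet asynchronously is the media swap pattern sketched below. This is an illustration rather than necessarily the exact technique used in our builds, and the file path is a placeholder.

```html
<!-- The browser fetches a "print" stylesheet without blocking rendering.
     Once it has loaded, the onload handler switches it to apply to all media. -->
<link rel="stylesheet" href="/css/below-fold.css" media="print" onload="this.media='all'">

<!-- Fallback for visitors with JavaScript disabled -->
<noscript><link rel="stylesheet" href="/css/below-fold.css"></noscript>
```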

Loading CSS on demand this way reduces critical chains, eliminates the ‘Reduce unused CSS’ warning in Lighthouse and reduces CPU work, as there are fewer selectors for the browser to parse.

Naturally CSS minification is a must too, but most modern build processes will automatically reduce CSS (and JS) file sizes by stripping out all unnecessary characters, comments etc.

3. Remove render blocking CSS by inlining critical CSS

If you have streamlined, minified and split out your CSS and have minimal or zero additional CSS loaded through add ons, you may be able to inline any remaining above the fold CSS.

Inlining critical CSS involves outputting CSS directly in <style></style> tags within your HTML rather than making a request to a CSS file, avoiding an additional request and allowing elements to be rendered faster. If the CSS file is under 14KB when compressed on the server, you should benefit from faster render times, with key metrics for FCP and LCP being positively impacted.
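In outline, an inlined setup might look like the sketch below. The selectors and rules are purely illustrative; in practice the contents of the style tag would be the compiled, minified above the fold CSS from your build process.

```html
<head>
  <meta charset="utf-8">
  <title>Example page</title>

  <!-- Critical above the fold CSS inlined: no extra request before first render -->
  <style>
    body { margin: 0; font-family: Arial, sans-serif; }
    .site-header { display: flex; align-items: center; height: 80px; }
    .hero { min-height: 60vh; }
  </style>

  <!-- Note: no render blocking <link rel="stylesheet"> in the head.
       Remaining CSS is loaded asynchronously further down the page. -->
</head>
```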

This step is usually one of the hardest to achieve in the quest for top performance; the vast majority of websites and most CMS platforms will have some level of render blocking CSS. It is something we have only been able to achieve thanks to the amount of customisation we have made to our site builds, on top of the open source nature of WordPress that supports these modifications.

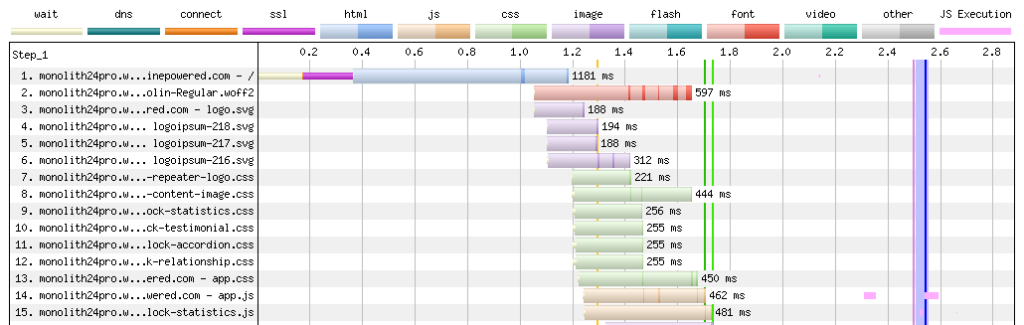

The difference inlining critical CSS can make is demonstrated by the waterfall diagrams from WebPageTest, simulating a low end device on a 4G connection.

In the first waterfall chart you can see the render blocking critical.css file as the 3rd request. Here, the all important ‘start render’ and FCP vertical green lines appear at the 2.3 second mark.

But with the critical CSS file (~8KB compressed) inlined, the difference when running the same test is significant, as the start render and FCP timings are over 500ms faster. This is despite an additional ~100ms on the Time to First Byte due to the slightly larger HTML containing the inlined CSS.

Generate images responsively (and responsibly)

Poorly optimised images can have a big impact on performance and Core Web Vitals, especially images that appear above the fold. Best practices around images for performance also go hand in hand with better accessibility and technical SEO factors.

1. Use the most appropriate image file type

Web supported images (newer formats like .webp aside) are almost always JPG, PNG, GIF or SVG. Each has its own advantages and use cases, and it’s important for any content managers to understand the differences to avoid uploading unnecessarily large images which will impact performance.

JPG images

Unlike the other formats, JPGs are ‘Lossy’ meaning a much greater compression is possible due to data being deleted from the file. JPGs are most appropriate for photographs or images with lots of colour variation and should be considered the default when exporting from design platforms. Exporting at a compression rate of around 60% will allow for much smaller file sizes, usually without any real perceivable loss of quality.

Do not use JPG images for icons or simple images with little colour variation as pixellation and artefacts will be more obvious when compressed.

PNG images

PNG images are not lossy, so they are generally much larger in size compared to JPGs. They are not appropriate for photographs, but work well with line art and simpler graphics with limited colours, and especially if transparency is needed.

SVG images

Unlike the other three file types, SVG images are vector based, so they can be enlarged to any screen size without loss of quality, as curves and shapes are encoded as calculations rather than pixels. On websites, SVGs work best for icons and simpler logos, which will appear sharp, especially on retina devices with high pixel density.

Keep an eye on the file size when using SVGs for more complex graphics, such as a logo with a complex shape, as a PNG may be more suitable.

GIF images

Outside of memes and social media, GIFs are rarely used on the web these days, as their range of colours is low and file sizes are large. Animation is usually delivered via CSS, JS or video instead.

New file formats

Newer image formats such as WebP and AVIF are gaining in popularity and browser support. With the right hosting provider, service (such as Cloudflare Polish, available with WPEngine) or plugin, it’s possible for these file formats to be generated automatically from the underlying JPG or PNG file, avoiding the need to manually upload additional file types.

2. Use proper <img> tags and responsive srcset

One thing I often observe when auditing performance on third party sites is a tendency for developers to output non decorative images inline using background-image CSS. This usually means either the desktop sized image is served on both large and small screen devices, or JS is used to swap the image at different breakpoints. Both invariably delay image rendering while a large image is downloaded or JS is executed, which matters most if the image is the LCP element.

The most effective way to serve responsive images at different sizes is by using the srcset attribute on the <img> tag, with different sized versions of the image defined for different breakpoints.

The use of the <img> tag is also important as it allows other native image controls such as fetchpriority and loading attributes to be declared along with alt text which is good for both accessibility and SEO.
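As a minimal sketch, a responsive image with srcset might look like the following. The file names, widths and the sizes value are placeholders that would depend on your layout and the image sizes your CMS generates.

```html
<img
  src="/images/hero-800.jpg"
  srcset="/images/hero-400.jpg 400w,
          /images/hero-800.jpg 800w,
          /images/hero-1600.jpg 1600w"
  sizes="(max-width: 600px) 100vw, 50vw"
  alt="Description of the image"
  width="1600" height="900">
```

The w descriptors tell the browser how wide each file is and the sizes attribute tells it how wide the image will be displayed, so it can choose the smallest suitable file for the device. The src attribute acts as the fallback for browsers without srcset support.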

3. Specify image dimensions to avoid layout shifting

<img> tags need either width and height attributes, or their ratio specified using the aspect-ratio CSS property.

This is important as it allows the browser to reserve the area required for the image. Otherwise the browser needs to download the image before it knows the image dimensions, which will likely result in a layout shift once the image has downloaded and its size can be calculated.
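Both approaches are sketched below; the class name, paths and dimensions are illustrative only.

```html
<!-- Option 1: width and height attributes. The browser derives the aspect
     ratio from these and reserves the space before the file downloads. -->
<img src="/images/card.jpg" alt="Description of the image" width="800" height="600">

<!-- Option 2: aspect-ratio in CSS, useful when the image is sized by its container -->
<style>
  .card-image {
    width: 100%;
    height: auto;
    aspect-ratio: 4 / 3;
  }
</style>
<img class="card-image" src="/images/card.jpg" alt="Description of the image">
```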

4. Automate compression

Depending on your CMS platform of choice, there are plenty of image optimisation plugins available that will automatically compress images, strip out unnecessary metadata etc. as the image is uploaded, taking the hassle out of manual compression.

Tools like Smush, ReSmush.it and TinyPNG are good options for WordPress. If your hosting provider does not support conversion to .webp, look out for add ons that can convert and deliver the newer .webp file format too, which offers even greater compression.

5. Lazy load all below the fold and off screen images

Another reason to use the <img> tag is that it supports the loading and fetchpriority attributes (covered earlier), which allow important above the fold images to be loaded faster, and images that sit outside the viewport to be loaded lazily.

Minimise use of plugins and embeds

Plugins, add ons and extensions, regardless of the CMS platform used, can often provide a quick and easy way to add features and functionality to a site. If you are not monitoring the impact of each new plugin, however, there is a high chance that performance will be negatively impacted, even by some of the more popular and trusted plugins that add features to the front end.

If you are a developer building custom themes, you should consider whether you really need a plugin in the first place, and limit wherever possible plugins that output additional assets on the front end. Sometimes plugins make a lot of commercial sense, sometimes they are unavoidable, but their impact can be minimised, or at least better understood, with the below tips:

- Determine performance impact. Use tools like PageSpeed Insights and WebPageTest to take an average performance reading before and after plugin install. Look at individual scores for each metric too, not just the overall score, as plugins can impact CWV in a variety of ways. For example, if they add scripts or styles to the <head> element this will affect FCP, LCP and Speed Index. Frequently, JavaScript output by plugins will affect Total Blocking Time, which can have a knock on effect on LCP

- Get an estimate from a developer. Depending on the complexity of the new feature/function, the benefit of investing in some custom development may outweigh potential performance impact

- Check if the plugin outputs additional CSS or JS in the <head>. If it does, it might be possible to move / defer these assets outside the head programmatically. In WordPress, functions including wp_dequeue_script() and wp_enqueue_script() may allow you to target specific assets, potentially conditionally loading assets only on required pages, without needing to modify plugin files directly

Choose a decent hosting provider

Sometimes, even with the most optimised and streamlined websites, a slow host can cost your users valuable seconds waiting for web pages. Features to look out for with hosting providers include:

- An infrastructure tailored specifically for the WordPress platform rather than a generalised provider

- Global data centers and CDN delivery

- Full page cached HTML CDN delivery, rather than CDN delivery of linked assets

- Automatic serving of .webp images on the fly through a service such as Cloudflare Polish

- Server configured with text compression and browser caching

- Object & Page caching for ‘Headless’ equivalent delivery of HTML and other caching options such as more aggressive caching on pages infrequently updated

- Hosting environment supports HTTP/2 delivery

- Scalable architecture

Our clients all benefit from the above and more when they use hosting provided by our recommended WordPress hosting provider WPEngine.

Collaboration is key

Responsibility for website performance is one that should be shared across several disciplines, even if the majority of the implementation is owned by the development team. I would always encourage collaboration early on during the project lifecycle, as well as general discussions with both delivery teams and stakeholders around your performance goals and what compromises (if any) need to be made.

For example:

- Discuss any CWV or technical SEO challenges (which usually go hand in hand with performance) with the SEO team who will have valuable insights and perspectives. And be open to change, even if that means shining a spotlight on the underlying tech stack

- Form components will usually require heavy validation or anti spam scripts such as Google’s reCAPTCHA. The UX or design team may want to avoid forms in the footer in favour of contact CTAs directing the user to a dedicated form page

- Share a staging link for new websites with your SEO team well in advance of launch for them to audit and advise on CWV

- Fonts hosted locally on the web server should usually perform better than requests to font foundries, and some font platforms, such as Typekit, may not allow .woff2 files to be downloaded. Google Fonts, which can be downloaded, can be a good option for UI designers to consider

- Discuss the compromises that might need to be made with your marketing/sales/data teams (or the client) on any additional third party business intelligence tools that will often require heavy JS

We would not have been able to achieve 100% mobile performance scores as developers by approaching this challenge alone. A deeper understanding and collaboration with other teams will give you a better chance at getting those super fast Core Web Vitals.

Summary

Fast websites are essential for delivering great user experiences and improving conversion rates and, in today’s competitive digital landscape, can give you the all important edge with Google’s Page Experience ranking factors.

Key actionable recommendations to achieve top Core Web Vital and performance scores include optimising the loading of critical assets, streamlining JavaScript execution and deferring non-essential scripts to reduce Total Blocking Time (TBT), while careful use of CSS ensures faster rendering and minimises unused styles. Additionally, responsive image optimisation, responsible use of plugins, and robust hosting infrastructure all play critical roles in boosting site performance.

When it comes to WordPress as a CMS, contrary to certain viewpoints, it is not inherently slow. But there are many poor performing websites out there, largely due to poorly built WordPress themes, plugins and site builders. On one hand, the open source nature of WordPress allows every line of code to be customised, a key attribute for a CMS platform that allows for the very highest scores. On the other, it has been detrimental in the sense that it easily enables the proliferation of poor performance practices within the ecosystem.

By implementing the best practices above, it is possible to achieve fast, high scoring websites that not only give you a better chance at higher rankings but deliver a better experience for real-world users. Whether you’re building a new site or refining an existing one, prioritising speed and user experience will help ensure your website stands out in an increasingly demanding online environment.